Klustron与Greenplum进行性能对比测试(TPCH)

Klustron与Greenplum进行性能对比测试(TPCH)

** 概述 **

通过使用业界流行的TPCH性能测试工具(TPC-H),针对Klustron-1.2 和Greenplum-6.24.5 分别进行测试,获得性能对比结论。第一次测试分别装入1GB数据,第二次测试各装入20GB数据。第一次测试对Klustron测试的部分数据量较大的表,分别使用单表和分区表;第二次测试对Klustron只使用分区表,没有使用单表。

两次测试中相同的步骤和操作不重复两遍,只分别列出有意义的不同内容。

测试环境一: (Greenplum)

Greenplum版本:6.24.5-rhel8-x86_64

| 节点类型 | IP | 端口 |

|---|---|---|

| Master | 192.168.0.20 | 5432 |

| Seg1节点 | 192.168.0.21 | 6000 |

| Seg2节点 | 192.168.0.22 | 6000 |

运行环境: CentOS 8.5 Linux 64位,CPU:AMD Ryzen 9 7950X 16-Core Processor, MEM: 128G, 存储:M.2 NVME SSD PCIE 4 固态 2T

测试环境二 : (Klustron)

| 节点类型 | IP | 端口 |

|---|---|---|

| 计算节点 | 192.168.0.20 | 47001 |

| Shard1主节点 | 192.168.0.21 | 57003 |

| Shard2主节点 | 192.168.0.22 | 57005 |

| XPanel | 192.168.0.20 | 40580 |

运行环境:CentOS 8.5 Linux 64位,CPU:AMD Ryzen 9 7950X 16-Core Processor, MEM: 128G, 存储:M.2 NVME SSD PCIE 4 固态 2T

测试环境三:( TPC-H )

TPC-H: v3.0.1

部署模式:单节点

运行环境:CentOS 8.5 Linux 64位,CPU:AMD Ryzen 9 7950X 16-Core Processor, MEM: 128G, 存储:M.2 NVME SSD PCIE 4 固态 2T

IP: 192.168.0.20

备注:因本TPC-H测试是以单用户方式对目标库施压,不需要形成压测压力,固在两个测试场景中,都使用192.168.0.20 来发起TPCH相关的SQL查询。

01 Greenplum性能测试

1.1. 测试准备

1.1.1. Greenplum安装过程

《略》

1.1.2. 参数调整(在Master节点执行)

gpconfig -c shared_buffers -v 32GB

gpconfig -c statement_timeout -v 6000000

gpconfig -c lock_timeout -v 1200000

gpconfig -c log_min_duration_statement -v 1200000

gpconfig -c effective_cache_size -v 64GB

gpconfig -c work_mem -v 8MB

gpconfig -c wal_buffers -v 64MB

gpconfig -c autovacuum -v false

gpstop -M fast

gpstart

1.1.3. 创建测试用户及数据库

psql -h 192.168.0.20 -p5432 -d gpdw #Greenplum安装完成时创建了gpdw数据库

create user tpch with password 'tpch';

create database tpch with owner tpch ;

alter user tpch with superuser;

1.1.4. 进入TPC-H软件的工作目录

cd /home/gpadmin/tpch301/dbgen

1.1.5. 创建TPC-H相关的测试对象

cat << EOF > dss.ddl

-- Sccsid: @(#)dss.ddl 2.1.8.1

CREATE TABLE ORDERS ( O_ORDERKEY INTEGER NOT NULL,

O_CUSTKEY INTEGER NOT NULL,

O_ORDERSTATUS CHAR(1) NOT NULL,

O_TOTALPRICE DECIMAL(15,2) NOT NULL,

O_ORDERDATE DATE NOT NULL,

O_ORDERPRIORITY CHAR(15) NOT NULL,

O_CLERK CHAR(15) NOT NULL,

O_SHIPPRIORITY INTEGER NOT NULL,

O_COMMENT VARCHAR(79) NOT NULL)

DISTRIBUTED BY (O_ORDERKEY);

CREATE TABLE PARTSUPP ( PS_PARTKEY INTEGER NOT NULL,

PS_SUPPKEY INTEGER NOT NULL,

PS_AVAILQTY INTEGER NOT NULL,

PS_SUPPLYCOST DECIMAL(15,2) NOT NULL,

PS_COMMENT VARCHAR(199) NOT NULL)

DISTRIBUTED BY (PS_PARTKEY,PS_SUPPKEY);

CREATE TABLE CUSTOMER ( C_CUSTKEY INTEGER NOT NULL,

C_NAME VARCHAR(25) NOT NULL,

C_ADDRESS VARCHAR(40) NOT NULL,

C_NATIONKEY INTEGER NOT NULL,

C_PHONE CHAR(15) NOT NULL,

C_ACCTBAL DECIMAL(15,2) NOT NULL,

C_MKTSEGMENT CHAR(10) NOT NULL,

C_COMMENT VARCHAR(117) NOT NULL)

DISTRIBUTED BY (C_CUSTKEY);

CREATE TABLE PART ( P_PARTKEY INTEGER NOT NULL,

P_NAME VARCHAR(55) NOT NULL,

P_MFGR CHAR(25) NOT NULL,

P_BRAND CHAR(10) NOT NULL,

P_TYPE VARCHAR(25) NOT NULL,

P_SIZE INTEGER NOT NULL,

P_CONTAINER CHAR(10) NOT NULL,

P_RETAILPRICE DECIMAL(15,2) NOT NULL,

P_COMMENT VARCHAR(23) NOT NULL)

DISTRIBUTED BY (P_PARTKEY);

CREATE TABLE SUPPLIER ( S_SUPPKEY INTEGER NOT NULL,

S_NAME CHAR(25) NOT NULL,

S_ADDRESS VARCHAR(40) NOT NULL,

S_NATIONKEY INTEGER NOT NULL,

S_PHONE CHAR(15) NOT NULL,

S_ACCTBAL DECIMAL(15,2) NOT NULL,

S_COMMENT VARCHAR(101) NOT NULL)

DISTRIBUTED BY (S_SUPPKEY);

CREATE TABLE NATION ( N_NATIONKEY INTEGER NOT NULL,

N_NAME CHAR(25) NOT NULL,

N_REGIONKEY INTEGER NOT NULL,

N_COMMENT VARCHAR(152))

DISTRIBUTED BY (N_NATIONKEY);

CREATE TABLE REGION ( R_REGIONKEY INTEGER NOT NULL,

R_NAME CHAR(25) NOT NULL,

R_COMMENT VARCHAR(152))

DISTRIBUTED BY (R_REGIONKEY);

CREATE TABLE LINEITEM ( L_ORDERKEY INTEGER NOT NULL,

L_PARTKEY INTEGER NOT NULL,

L_SUPPKEY INTEGER NOT NULL,

L_LINENUMBER INTEGER NOT NULL,

L_QUANTITY DECIMAL(15,2) NOT NULL,

L_EXTENDEDPRICE DECIMAL(15,2) NOT NULL,

L_DISCOUNT DECIMAL(15,2) NOT NULL,

L_TAX DECIMAL(15,2) NOT NULL,

L_RETURNFLAG CHAR(1) NOT NULL,

L_LINESTATUS CHAR(1) NOT NULL,

L_SHIPDATE DATE NOT NULL,

L_COMMITDATE DATE NOT NULL,

L_RECEIPTDATE DATE NOT NULL,

L_SHIPINSTRUCT CHAR(25) NOT NULL,

L_SHIPMODE CHAR(10) NOT NULL,

L_COMMENT VARCHAR(44) NOT NULL)

DISTRIBUTED BY (L_LINENUMBER, L_ORDERKEY);

EOF

psql -h 192.168.0.20 -p5432 -Utpch -d tpch -f ./dss.ddl

cat << EOF > tpch-index

-- indexes on the foreign keys

CREATE INDEX IDX_SUPPLIER_NATION_KEY ON SUPPLIER (S_NATIONKEY);

CREATE INDEX IDX_PARTSUPP_PARTKEY ON PARTSUPP (PS_PARTKEY);

CREATE INDEX IDX_PARTSUPP_SUPPKEY ON PARTSUPP (PS_SUPPKEY);

CREATE INDEX IDX_CUSTOMER_NATIONKEY ON CUSTOMER (C_NATIONKEY);

CREATE INDEX IDX_ORDERS_CUSTKEY ON ORDERS (O_CUSTKEY);

CREATE INDEX IDX_LINEITEM_ORDERKEY ON LINEITEM (L_ORDERKEY);

CREATE INDEX IDX_LINEITEM_PART_SUPP ON LINEITEM (L_PARTKEY,L_SUPPKEY);

CREATE INDEX IDX_NATION_REGIONKEY ON NATION (N_REGIONKEY);

-- aditional indexes

CREATE INDEX IDX_LINEITEM_SHIPDATE ON LINEITEM (L_SHIPDATE, L_DISCOUNT, L_QUANTITY);

CREATE INDEX IDX_ORDERS_ORDERDATE ON ORDERS (O_ORDERDATE);

EOF

psql -h 192.168.0.20 -p5432 -Utpch -d tpch -f ./tpch-index

cat << EOF > tpch-pkeys

ALTER TABLE PART ADD PRIMARY KEY (P_PARTKEY);

ALTER TABLE SUPPLIER ADD PRIMARY KEY (S_SUPPKEY);

ALTER TABLE PARTSUPP ADD PRIMARY KEY (PS_PARTKEY, PS_SUPPKEY);

ALTER TABLE CUSTOMER ADD PRIMARY KEY (C_CUSTKEY);

ALTER TABLE ORDERS ADD PRIMARY KEY (O_ORDERKEY);

ALTER TABLE LINEITEM ADD PRIMARY KEY (L_ORDERKEY, L_LINENUMBER);

ALTER TABLE NATION ADD PRIMARY KEY (N_NATIONKEY);

ALTER TABLE REGION ADD PRIMARY KEY (R_REGIONKEY);

EOF

psql -h 192.168.0.20 -p5432 -Utpch -d tpch -f ./tpch-pkeys

1.1.6. 对测试工具进行参数配置及编译生成可执行程序

cp makefile.suite makefile

vi makefile

--------------------------------

CC = gcc

DATABASE= ORACLE

MACHINE = LINUX

WORKLOAD = TPCH

make

备注:注意确保已安装gcc相关的包

1.1.7. 生成测试数据并对测试数据进行预处理

./dbgen -vf -s 1 #生成1GB测试数据

vi trim-data.sh

----------------------------------

for i in `ls *.tbl`

do

sed 's/|$//' $i > ${i}.csv;

done

sh trim-data.sh

split -l 100000 -d lineitem.tbl.csv lineitem.tbl.csv-

1.1.8. 完成测试数据装载

psql -h 192.168.0.20 -p5432 -Utpch -d tpch

COPY customer(C_CUSTKEY,C_NAME,C_ADDRESS,C_NATIONKEY,C_PHONE,C_ACCTBAL,C_MKTSEGMENT,C_COMMENT) FROM '/home/gpadmin/tpch301/dbgen/customer.tbl.csv' delimiter '|' ;

COPY nation(N_NATIONKEY,N_NAME,N_REGIONKEY,N_COMMENT) FROM '/home/gpadmin/tpch301/dbgen/nation.tbl.csv' delimiter '|' ;

COPY orders(O_ORDERKEY,O_CUSTKEY,O_ORDERSTATUS,O_TOTALPRICE,O_ORDERDATE,O_ORDERPRIORITY,O_CLERK,O_SHIPPRIORITY,O_COMMENT) FROM '/home/gpadmin/tpch301/dbgen/orders.tbl.csv' delimiter '|' ;

COPY partsupp(PS_PARTKEY,PS_SUPPKEY,PS_AVAILQTY,PS_SUPPLYCOST,PS_COMMENT) FROM '/home/gpadmin/tpch301/dbgen/partsupp.tbl.csv' delimiter '|' ;

COPY part(P_PARTKEY,P_NAME,P_MFGR,P_BRAND,P_TYPE,P_SIZE,P_CONTAINER,P_RETAILPRICE,P_COMMENT) FROM '/home/gpadmin/tpch301/dbgen/part.tbl.csv' delimiter '|' ;

COPY region(R_REGIONKEY,R_NAME,R_COMMENT) FROM '/home/gpadmin/tpch301/dbgen/region.tbl.csv' delimiter '|' ;

COPY supplier(S_SUPPKEY,S_NAME,S_ADDRESS,S_NATIONKEY,S_PHONE,S_ACCTBAL,S_COMMENT) FROM '/home/gpadmin/tpch301/dbgen/supplier.tbl.csv' delimiter '|' ;

vi load_lineitem.sh

-----------------------------------

for i in `ls lineitem.tbl.csv-*`

do

PGPASSWORD=tpch psql -h 192.168.0.20 -p 5432 -U tpch -d tpch -c "\copy lineitem from '/home/gpadmin/tpch301/dbgen/${i}' with delimiter as '|' NULL ' ';" ;

done

sh load_lineitem.sh

备注:此处需要上传dss.tgz到dbgen目录并解压

1.1.9. 测试数据说明

第一次测试,按前述dbgen指令生成测试数据1GB,且完成数据装载后,在Greenplum数据库中,8张表相应的数据记录行数统计如下:

Customer:150000

Orders: 1500000

Partsupp: 800000

Part: 200000

Region: 5

Supplier: 10000

Nation: 25

Lineitem: 6001215

第二次测试,按前述dbgen指令生成测试数据20GB,且完成数据装载后,在Greenplum数据库中,8张表相应的数据记录行数统计如下:

Customer:3000000

Orders: 30000000

Partsupp: 16000000

Part: 4000000

Region: 5

Supplier: 200000

Nation: 25

Lineitem: 119994608

1.1.10. 生成TPC-H测试SQL

mkdir query

more gen-sql.sh

---------------------------

for q in `seq 1 22`

do

DSS_QUERY=dss/templates ./qgen ${q} >> query/${q}.sql

sed 's/^select/explain select/' query/${q}.sql > query/${q}.explain.sql

cat query/${q}.sql >> query/${q}.explain.sql;

done

sh gen-sql.sh

1.1.11. 对装载对象进行分析

ana.sh

----------------------------

for i in `cat ./dss.ddl | grep 'CREATE TABLE' | grep -v partition | awk '{print $3}'`

do

echo "select * from $i limit 1;" > a.sql

clomun=`psql -h 192.168.0.20 -p 5432 -U tpch -d tpch -f a.sql | sed -n 1p | sed 's/|/,/g'`

analyze="analyze $i($clomun)"

echo $analyze

echo $analyze > tmp.sql

psql -h 192.168.0.20 -p 5432 -U tpch -d tpch -f tmp.sql

done

sh ana.sh

1.2. 运行TPC-H测试

mkdir run_log

more run_tpch.sh

--------------------------

for i in {1..22}

do

echo "begin run Q${i}, query/$i.sql , `date`"

begin_time=`date +%s.%N`

PGPASSWORD=tpch psql -h 192.168.0.20 -p 5432-d tpch -U tpch -f query/${i}.sql > ./run_log/log_q${i}.out

rc=$?

end_time=`date +%s.%N`

cost=`echo "$end_time-$begin_time"|bc`

total_cost=`echo "$total_cost+$cost"|bc`

if [ $rc -ne 0 ] ; then

printf "run Q%s fail, cost: %.2f, totalCost: %.2f, `date`\n" $i $cost $total_cost

else

printf "run Q%s succ, cost: %.2f, totalCost: %.2f, `date`\n" $i $cost $total_cost

fi

done

sh run_tpch.sh

备注:需要该节点已安装bc包:yum install bc

1.3. TPC-H测试结果

第一次测试(1GB)结果

| SQL序号 | 运行时长(秒) | 备注 |

|---|---|---|

| 1 | 3.10 | |

| 2 | 0.65 | |

| 3 | 1.12 | |

| 4 | 0.99 | |

| 5 | 1.21 | |

| 6 | 0.12 | |

| 7 | 1.10 | |

| 8 | 1.14 | |

| 9 | 1.48 | |

| 10 | 1.01 | |

| 11 | 0.27 | |

| 12 | 0.95 | |

| 13 | 0.53 | |

| 14 | 0.11 | |

| 15 | 0.22 | |

| 16 | 0.21 | |

| 17 | 1.96 | |

| 18 | 1.98 | |

| 19 | 0.16 | |

| 20 | 0.87 | |

| 21 | 1.98 | |

| 22 | 0.30 |

第二次测试(20GB)结果

| SQL序号 | 运行时长(秒) | 备注 |

|---|---|---|

| 1 | 40.34 | |

| 2 | 2.61 | |

| 3 | 8.24 | |

| 4 | 10.66 | |

| 5 | 9.08 | |

| 6 | 1.31 | |

| 7 | 7.32 | |

| 8 | 6.53 | |

| 9 | 14.33 | |

| 10 | 7.71 | |

| 11 | 1.41 | |

| 12 | 7.07 | |

| 13 | 7.28 | |

| 14 | 4.32 | |

| 15 | 10.19 | |

| 16 | 2.21 | |

| 17 | 73.04 | |

| 18 | 50.78 | |

| 19 | 0.84 | |

| 20 | 10.62 | |

| 21 | 24.15 | |

| 22 | 4.38 |

02 Klustron性能测试

2.1. 测试准备

2.1.1. 安装Klustron

<略> 使用 Klustron-1.2.1 版本

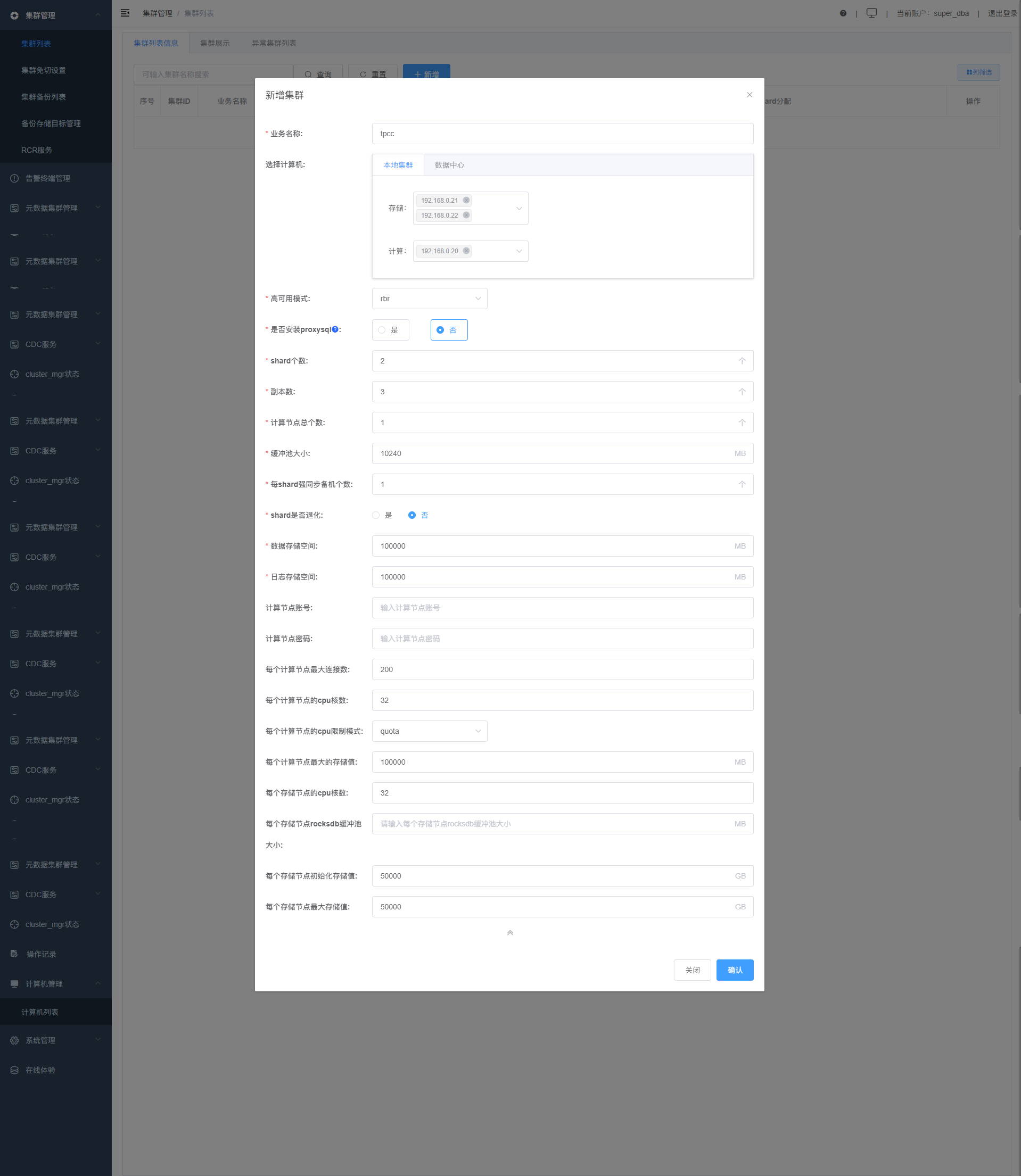

附用于测试创建的集群参数设定

2.1.2. 修改计算节点及存储节点的参数

计算节点(PG)重点参数:

psql -h 192.168.0.20 -p 47001 -U abc postgres

alter system set shared_buffers='32GB';

alter system set statement_timeout=6000000;

alter system set mysql_read_timeout=1200;

alter system set mysql_write_timeout=1200;

alter system set lock_timeout=1200000;

alter system set log_min_duration_statement=1200000;

alter system set effective_cache_size = '64GB';

alter system set work_mem = '8MB';

alter system set wal_buffers='64MB';

alter system set autovacuum=false;

alter system set log_min_messages='DEBUG1' ; #需要debug sql时开启

alter system set enable_sql_log=on; #需要debug sql 时开启

pg_ctl restart -D /nvme2/kunlun/server_datadir/47001

存储节点(MYSQL)重点参数:

innodb_buffer_pool_size=32*1024*1024*1024

lock_wait_timeout=1200

innodb_lock_wait_timeout=1200

fullsync_timeout=1200000

enable_fullsync=false

innodb_flush_log_at_trx_commit=2

sync_binlog=0

max_binlog_size=1*1024*1024*1024

mysql -h 192.168.0.21 -P 57003 -upgx -ppgx_pwd

mysql -h 192.168.0.22 -P 57005 -upgx -ppgx_pwd

set global innodb_buffer_pool_size=32*1024*1024*1024;

set global lock_wait_timeout=1200;

set global innodb_lock_wait_timeout=1200;

set global fullsync_timeout=1200000;

set global enable_fullsync=false;

set global innodb_flush_log_at_trx_commit=2;

set global sync_binlog=0;

set global max_binlog_size=1*1024*1024*1024;

set global enable_fullsync=off; #所有shard主节点关闭全同步

set enable_fullsync=off; #所有shard主节点关闭全同步

set global general_log=on ; #需要debug SQL时打开

备注:免切设置为10800秒(Xpanel)避免数据装载期间主节点异常导致节点切换

元数据集群MySQL RBR 3节点参数调整:

export LD_LIBRARY_PATH=/nvme2/kunlun/instance_binaries/storage/57001/Klustron-storage-1.2.1/lib:$LD_LIBRARY_PATH ;

/nvme2/kunlun/instance_binaries/storage/57001/Klustron-storage-1.2.1/bin/mysql --connect-expired-password -S/nvme2/kunlun/storage_logdir/57001/mysql.sock -uroot -proot

set global innodb_buffer_pool_size=1*1024*1024*1024; -- (缺省128M)

2.1.3. 在Klustron集群中创建测试数据库和测试用户

psql -h 192.168.0.20 -p 47001 -U abc postgres

create user tpch with password 'tpch';

create database tpch with owner tpch ;

grant pg_read_server_files to tpch ;

2.1.4. 进入TPC-H工作目录

cd /home/kunlun/tpch301/dbgen

2.1.5. 创建TPC-H相关的测试对象

cat << EOF > dss.ddl

-- Sccsid: @(#)dss.ddl 2.1.8.1

CREATE TABLE NATION ( N_NATIONKEY INTEGER NOT NULL,

N_NAME CHAR(25) NOT NULL,

N_REGIONKEY INTEGER NOT NULL,

N_COMMENT VARCHAR(152)) partition by hash(N_NATIONKEY);

create table NATION_0 partition of NATION for values with(MODULUS 6, REMAINDER 0) with (shard = 1);

create table NATION_1 partition of NATION for values with(MODULUS 6, REMAINDER 1) with (shard = 1);

create table NATION_2 partition of NATION for values with(MODULUS 6, REMAINDER 2) with (shard = 1);

create table NATION_3 partition of NATION for values with(MODULUS 6, REMAINDER 3) with (shard = 2);

create table NATION_4 partition of NATION for values with(MODULUS 6, REMAINDER 4) with (shard = 2);

create table NATION_5 partition of NATION for values with(MODULUS 6, REMAINDER 5) with (shard = 2);

CREATE TABLE REGION ( R_REGIONKEY INTEGER NOT NULL,

R_NAME CHAR(25) NOT NULL,

R_COMMENT VARCHAR(152)) partition by hash(R_REGIONKEY);

create table REGION_0 partition of REGION for values with(MODULUS 6, REMAINDER 0) with (shard = 1);

create table REGION_1 partition of REGION for values with(MODULUS 6, REMAINDER 1) with (shard = 1);

create table REGION_2 partition of REGION for values with(MODULUS 6, REMAINDER 2) with (shard = 1);

create table REGION_3 partition of REGION for values with(MODULUS 6, REMAINDER 3) with (shard = 2);

create table REGION_4 partition of REGION for values with(MODULUS 6, REMAINDER 4) with (shard = 2);

create table REGION_5 partition of REGION for values with(MODULUS 6, REMAINDER 5) with (shard = 2);

CREATE TABLE PART ( P_PARTKEY INTEGER NOT NULL,

P_NAME VARCHAR(55) NOT NULL,

P_MFGR CHAR(25) NOT NULL,

P_BRAND CHAR(10) NOT NULL,

P_TYPE VARCHAR(25) NOT NULL,

P_SIZE INTEGER NOT NULL,

P_CONTAINER CHAR(10) NOT NULL,

P_RETAILPRICE DECIMAL(15,2) NOT NULL,

P_COMMENT VARCHAR(23) NOT NULL ) partition by hash(P_PARTKEY);

create table PART_0 partition of PART for values with(MODULUS 6, REMAINDER 0) with (shard = 1);

create table PART_1 partition of PART for values with(MODULUS 6, REMAINDER 1) with (shard = 1);

create table PART_2 partition of PART for values with(MODULUS 6, REMAINDER 2) with (shard = 1);

create table PART_3 partition of PART for values with(MODULUS 6, REMAINDER 3) with (shard = 2);

create table PART_4 partition of PART for values with(MODULUS 6, REMAINDER 4) with (shard = 2);

create table PART_5 partition of PART for values with(MODULUS 6, REMAINDER 5) with (shard = 2);

CREATE TABLE SUPPLIER ( S_SUPPKEY INTEGER NOT NULL,

S_NAME CHAR(25) NOT NULL,

S_ADDRESS VARCHAR(40) NOT NULL,

S_NATIONKEY INTEGER NOT NULL,

S_PHONE CHAR(15) NOT NULL,

S_ACCTBAL DECIMAL(15,2) NOT NULL,

S_COMMENT VARCHAR(101) NOT NULL) partition by hash(S_SUPPKEY) ;

create table SUPPLIER_0 partition of SUPPLIER for values with(MODULUS 6, REMAINDER 0) with (shard = 1);

create table SUPPLIER_1 partition of SUPPLIER for values with(MODULUS 6, REMAINDER 1) with (shard = 1);

create table SUPPLIER_2 partition of SUPPLIER for values with(MODULUS 6, REMAINDER 2) with (shard = 1);

create table SUPPLIER_3 partition of SUPPLIER for values with(MODULUS 6, REMAINDER 3) with (shard = 2);

create table SUPPLIER_4 partition of SUPPLIER for values with(MODULUS 6, REMAINDER 4) with (shard = 2);

create table SUPPLIER_5 partition of SUPPLIER for values with(MODULUS 6, REMAINDER 5) with (shard = 2);

CREATE TABLE PARTSUPP ( PS_PARTKEY INTEGER NOT NULL,

PS_SUPPKEY INTEGER NOT NULL,

PS_AVAILQTY INTEGER NOT NULL,

PS_SUPPLYCOST DECIMAL(15,2) NOT NULL,

PS_COMMENT VARCHAR(199) NOT NULL ) partition by hash(PS_PARTKEY,PS_SUPPKEY) ;

create table PARTSUPP_0 partition of PARTSUPP for values with(MODULUS 6, REMAINDER 0) with (shard = 1);

create table PARTSUPP_1 partition of PARTSUPP for values with(MODULUS 6, REMAINDER 1) with (shard = 1);

create table PARTSUPP_2 partition of PARTSUPP for values with(MODULUS 6, REMAINDER 2) with (shard = 1);

create table PARTSUPP_3 partition of PARTSUPP for values with(MODULUS 6, REMAINDER 3) with (shard = 2);

create table PARTSUPP_4 partition of PARTSUPP for values with(MODULUS 6, REMAINDER 4) with (shard = 2);

create table PARTSUPP_5 partition of PARTSUPP for values with(MODULUS 6, REMAINDER 5) with (shard = 2);

CREATE TABLE CUSTOMER ( C_CUSTKEY INTEGER NOT NULL,

C_NAME VARCHAR(25) NOT NULL,

C_ADDRESS VARCHAR(40) NOT NULL,

C_NATIONKEY INTEGER NOT NULL,

C_PHONE CHAR(15) NOT NULL,

C_ACCTBAL DECIMAL(15,2) NOT NULL,

C_MKTSEGMENT CHAR(10) NOT NULL,

C_COMMENT VARCHAR(117) NOT NULL) partition by hash(C_CUSTKEY) ;

create table CUSTOMER_0 partition of CUSTOMER for values with(MODULUS 6, REMAINDER 0) with (shard = 1);

create table CUSTOMER_1 partition of CUSTOMER for values with(MODULUS 6, REMAINDER 1) with (shard = 1);

create table CUSTOMER_2 partition of CUSTOMER for values with(MODULUS 6, REMAINDER 2) with (shard = 1);

create table CUSTOMER_3 partition of CUSTOMER for values with(MODULUS 6, REMAINDER 3) with (shard = 2);

create table CUSTOMER_4 partition of CUSTOMER for values with(MODULUS 6, REMAINDER 4) with (shard = 2);

create table CUSTOMER_5 partition of CUSTOMER for values with(MODULUS 6, REMAINDER 5) with (shard = 2);

CREATE TABLE ORDERS ( O_ORDERKEY INTEGER NOT NULL,

O_CUSTKEY INTEGER NOT NULL,

O_ORDERSTATUS CHAR(1) NOT NULL,

O_TOTALPRICE DECIMAL(15,2) NOT NULL,

O_ORDERDATE DATE NOT NULL,

O_ORDERPRIORITY CHAR(15) NOT NULL,

O_CLERK CHAR(15) NOT NULL,

O_SHIPPRIORITY INTEGER NOT NULL,

O_COMMENT VARCHAR(79) NOT NULL) partition by hash(O_ORDERKEY);

create table ORDERS_0 partition of ORDERS for values with(MODULUS 6, REMAINDER 0) with (shard = 1);

create table ORDERS_1 partition of ORDERS for values with(MODULUS 6, REMAINDER 1) with (shard = 1);

create table ORDERS_2 partition of ORDERS for values with(MODULUS 6, REMAINDER 2) with (shard = 1);

create table ORDERS_3 partition of ORDERS for values with(MODULUS 6, REMAINDER 3) with (shard = 2);

create table ORDERS_4 partition of ORDERS for values with(MODULUS 6, REMAINDER 4) with (shard = 2);

create table ORDERS_5 partition of ORDERS for values with(MODULUS 6, REMAINDER 5) with (shard = 2);

CREATE TABLE LINEITEM ( L_ORDERKEY INTEGER NOT NULL,

L_PARTKEY INTEGER NOT NULL,

L_SUPPKEY INTEGER NOT NULL,

L_LINENUMBER INTEGER NOT NULL,

L_QUANTITY DECIMAL(15,2) NOT NULL,

L_EXTENDEDPRICE DECIMAL(15,2) NOT NULL,

L_DISCOUNT DECIMAL(15,2) NOT NULL,

L_TAX DECIMAL(15,2) NOT NULL,

L_RETURNFLAG CHAR(1) NOT NULL,

L_LINESTATUS CHAR(1) NOT NULL,

L_SHIPDATE DATE NOT NULL,

L_COMMITDATE DATE NOT NULL,

L_RECEIPTDATE DATE NOT NULL,

L_SHIPINSTRUCT CHAR(25) NOT NULL,

L_SHIPMODE CHAR(10) NOT NULL,

L_COMMENT VARCHAR(44) NOT NULL) partition by hash(L_LINENUMBER, L_ORDERKEY);

create table LINEITEM_0 partition of LINEITEM for values with(MODULUS 6, REMAINDER 0) with (shard = 1);

create table LINEITEM_1 partition of LINEITEM for values with(MODULUS 6, REMAINDER 1) with (shard = 1);

create table LINEITEM_2 partition of LINEITEM for values with(MODULUS 6, REMAINDER 2) with (shard = 1);

create table LINEITEM_3 partition of LINEITEM for values with(MODULUS 6, REMAINDER 3) with (shard = 2);

create table LINEITEM_4 partition of LINEITEM for values with(MODULUS 6, REMAINDER 4) with (shard = 2);

create table LINEITEM_5 partition of LINEITEM for values with(MODULUS 6, REMAINDER 5) with (shard = 2);

EOF

psql -h 192.168.0.20 -p 47001 -U tpch tpch -f ./dss.ddl

cat << EOF > tpch-index

-- indexes on the foreign keys

CREATE INDEX IDX_SUPPLIER_NATION_KEY ON SUPPLIER (S_NATIONKEY);

CREATE INDEX IDX_PARTSUPP_PARTKEY ON PARTSUPP (PS_PARTKEY);

CREATE INDEX IDX_PARTSUPP_SUPPKEY ON PARTSUPP (PS_SUPPKEY);

CREATE INDEX IDX_CUSTOMER_NATIONKEY ON CUSTOMER (C_NATIONKEY);

CREATE INDEX IDX_ORDERS_CUSTKEY ON ORDERS (O_CUSTKEY);

CREATE INDEX IDX_LINEITEM_ORDERKEY ON LINEITEM (L_ORDERKEY);

CREATE INDEX IDX_LINEITEM_PART_SUPP ON LINEITEM (L_PARTKEY,L_SUPPKEY);

CREATE INDEX IDX_NATION_REGIONKEY ON NATION (N_REGIONKEY);

-- aditional indexes

CREATE INDEX IDX_LINEITEM_SHIPDATE ON LINEITEM (L_SHIPDATE, L_DISCOUNT, L_QUANTITY);

CREATE INDEX IDX_ORDERS_ORDERDATE ON ORDERS (O_ORDERDATE);

EOF

psql -h 192.168.0.20 -p 47001 -U tpch tpch -f ./tpch-index

cat << EOF > tpch-pkeys

ALTER TABLE PART ADD PRIMARY KEY (P_PARTKEY);

ALTER TABLE SUPPLIER ADD PRIMARY KEY (S_SUPPKEY);

ALTER TABLE PARTSUPP ADD PRIMARY KEY (PS_PARTKEY, PS_SUPPKEY);

ALTER TABLE CUSTOMER ADD PRIMARY KEY (C_CUSTKEY);

ALTER TABLE ORDERS ADD PRIMARY KEY (O_ORDERKEY);

ALTER TABLE LINEITEM ADD PRIMARY KEY (L_ORDERKEY, L_LINENUMBER);

ALTER TABLE NATION ADD PRIMARY KEY (N_NATIONKEY);

ALTER TABLE REGION ADD PRIMARY KEY (R_REGIONKEY);

EOF

psql -h 192.168.0.20 -p 47001 -U tpch tpch -f ./tpch-pkeys

2.1.6. 对测试工具进行参数配置及编译生成可执行程序

cp makefile.suite makefile

vi makefile

--------------------------------

CC = gcc

DATABASE= ORACLE

MACHINE = LINUX

WORKLOAD = TPCH

make

备注:注意确保已安装gcc相关的包

2.1.7. 生成测试数据并对测试数据进行预处理

./dbgen -vf -s 1 #生成1GB测试数据

vi trim-data.sh

----------------------------------

for i in `ls *.tbl`

do

sed 's/|$//' $i > ${i}.csv;

done

sh trim-data.sh

split -l 100000 -d lineitem.tbl.csv lineitem.tbl.csv-

说明:为保证测试数据一致,在获得 Klustron 的 TPCH 测试数据时,直接使用了前面 Greenplum 测试中 dbgen 生成的测试数据文件。

2.1.8. 第一次测试数据装载

第一次测试数据量不大,直接串行灌入数据。

psql -h 192.168.0.20 -p 47001 -U tpch -d tpch

COPY customer(C_CUSTKEY,C_NAME,C_ADDRESS,C_NATIONKEY,C_PHONE,C_ACCTBAL,C_MKTSEGMENT,C_COMMENT) FROM '/home/kunlun/tpch301/dbgen/customer.tbl.csv' delimiter '|' ;

COPY nation(N_NATIONKEY,N_NAME,N_REGIONKEY,N_COMMENT) FROM '/home/kunlun/tpch301/dbgen/nation.tbl.csv' delimiter '|' ;

COPY orders(O_ORDERKEY,O_CUSTKEY,O_ORDERSTATUS,O_TOTALPRICE,O_ORDERDATE,O_ORDERPRIORITY,O_CLERK,O_SHIPPRIORITY,O_COMMENT) FROM '/home/kunlun/tpch301/dbgen/orders.tbl.csv' delimiter '|' ;

COPY partsupp(PS_PARTKEY,PS_SUPPKEY,PS_AVAILQTY,PS_SUPPLYCOST,PS_COMMENT) FROM '/home/kunlun/tpch301/dbgen/partsupp.tbl.csv' delimiter '|' ;

COPY part(P_PARTKEY,P_NAME,P_MFGR,P_BRAND,P_TYPE,P_SIZE,P_CONTAINER,P_RETAILPRICE,P_COMMENT) FROM '/home/kunlun/tpch301/dbgen/part.tbl.csv' delimiter '|' ;

COPY region(R_REGIONKEY,R_NAME,R_COMMENT) FROM '/home/kunlun/tpch301/dbgen/region.tbl.csv' delimiter '|' ;

COPY supplier(S_SUPPKEY,S_NAME,S_ADDRESS,S_NATIONKEY,S_PHONE,S_ACCTBAL,S_COMMENT) FROM '/home/kunlunn/tpch301/dbgen/supplier.tbl.csv' delimiter '|' ;

vi load_lineitem.sh

#-----------------------------------

for i in `ls lineitem.tbl.csv-*`

do

PGPASSWORD=tpch psql -h 192.168.0.20 -p 47001 -U tpch -d tpch -c "\copy lineitem from '/home/kunlun/tpch301/dbgen/${i}' with delimiter as '|' NULL ' ';" ;

done

sh load_lineitem.sh

备注:此处需要上传dss.tgz到dbgen目录并解压

第二次测试中,由于测试装入数据了比较大,为了尽快完成装载,需要做并行装载。在装载过程中,对Klustron 存储shard主节点如下参数临时调整:

set global innodb_flush_log_at_trx_commit=0;

set global sync_binlog=0;

2.1.9. 第二次测试数据装载

第二次测试数据量较大,并行灌入数据。

psql -h 192.168.0.20 -p 47001 -U tpch -d tpch

-- 开启一个ssh 连接1到计算节点,执行如下指令:

COPY region(R_REGIONKEY,R_NAME,R_COMMENT) FROM '/home/kunlun/tpch301/dbgen/region.tbl.csv' delimiter '|' ;

COPY supplier(S_SUPPKEY,S_NAME,S_ADDRESS,S_NATIONKEY,S_PHONE,S_ACCTBAL,S_COMMENT) FROM '/home/kunlun/tpch301/dbgen/supplier.tbl.csv' delimiter '|' ;

COPY nation(N_NATIONKEY,N_NAME,N_REGIONKEY,N_COMMENT) FROM '/home/kunlun/tpch301/dbgen/nation.tbl.csv' delimiter '|' ;

# 开启一个ssh 连接2到计算节点,执行如下指令:

vi load_lineitem.sh

#-----------------------------------

for i in `ls lineitem.tbl.csv-*`

do

PGPASSWORD=tpch psql -h 192.168.0.20 -p 47001 -U tpch -d tpch -c "\copy lineitem from '/home/kunlun/tpch301/dbgen/${i}' with delimiter as '|' NULL ' ';" ;

done

sh load_lineitem.sh

# 开启一个ssh 连接3到计算节点,执行如下指令:

vi load_customer.sh

#-----------------------------------

for i in `ls customer.tbl.csv-*`

do

PGPASSWORD=tpch psql -h 192.168.0.20 -p 47001 -U tpch -d tpch -c "\copy customer from '/home/kunlun/tpch301/dbgen/${i}' with delimiter as '|' NULL ' ';" ;

done

sh load_customer.sh

# 开启一个ssh 连接4到计算节点,执行如下指令:

vi load_orders.sh

#-----------------------------------

for i in `ls orders.tbl.csv-*`

do

PGPASSWORD=tpch psql -h 192.168.0.20 -p 47001 -U tpch -d tpch -c "\copy orders from '/home/kunlun/tpch301/dbgen/${i}' with delimiter as '|' NULL ' ';" ;

done

sh load_orders.sh

# 开启一个ssh 连接5到计算节点,执行如下指令:

vi load_partsupp.sh

#-----------------------------------

for i in `ls partsupp.tbl.csv-*`

do

PGPASSWORD=tpch psql -h 192.168.0.20 -p 47001 -U tpch -d tpch -c "\copy partsupp from '/home/kunlun/tpch301/dbgen/${i}' with delimiter as '|' NULL ' ';" ;

done

sh load_partsupp.sh

# 开启一个ssh 连接6到计算节点,执行如下指令:

vi load_part.sh

#-----------------------------------

for i in `ls part.tbl.csv-*`

do

PGPASSWORD=tpch psql -h 192.168.0.20 -p 47001 -U tpch -d tpch -c "\copy part from '/home/kunlun/tpch301/dbgen/${i}' with delimiter as '|' NULL ' ';" ;

done

sh load_part.sh

备注:此处需要上传dss.tgz到dbgen目录并解压

2.1.10. 数据装载临时优化参数恢复

第二次测试中,灌完数据后恢复这两个参数的默认值。

set global innodb_flush_log_at_trx_commit=1;

set global sync_binlog=1;

2.1.11. Klustron并行查询参数优化

第二次测试做了如下的参数优化,以便有更好的性能。第一次测试中参数设置并没有为OLAP 优化资源分配。

当执行OLAP分析时,通常应用发起的连接数量并不多,但是每个语句执行所需的CPU和内存资源较大,所以需要在计算节点和存储节点中做适当设置以便达到最好的性能,按如下参数在计算节点及存储节点完成设置:

# 计算节点:

psql -h 192.168.0.20 -p 47001 -U abc postgres

alter system set max_parallel_workers_per_gather = 32;

alter system set max_parallel_workers = 128;

alter system set work_mem = ‘64MB’;

pg_ctl restart -D /nvme2/kunlun/server_datadir/47001

# 存储节点(shard主):

mysql -h 192.168.0.21 -P 57003 -upgx -ppgx_pwd

mysql -h 192.168.0.22 -P 57005 -upgx -ppgx_pwd

set global optimizer_switch='mrr_cost_based=off,batched_key_access=on';

set global join_buffer_size = 128*1024*1024;

set global sort_buffer_size = 64*1024*1024;

set global read_rnd_buffer_size = 64*1024*1024;

2.1.12. 生成TPC-H测试SQL

mkdir query

more gen-sql.sh

---------------------------

for q in `seq 1 22`

do

DSS_QUERY=dss/templates ./qgen ${q} >> query/${q}.sql

sed 's/^select/explain select/' query/${q}.sql > query/${q}.explain.sql

cat query/${q}.sql >> query/${q}.explain.sql;

done

sh gen-sql.sh

2.1.13. 对装载对象进行分析

ana.sh

----------------------------

for i in `cat ./dss.ddl | grep 'CREATE TABLE' | grep -v partition | awk '{print $3}'`

do

echo "select * from $i limit 1;" > a.sql

clomun=`psql -h 192.168.0.20 -p 47001 -U tpch -d tpch -f a.sql | sed -n 1p | sed 's/|/,/g'`

analyze="analyze $i($clomun)"

echo $analyze

echo $analyze > tmp.sql

psql -h 192.168.0.20 -p 47001 -U tpch -d tpch -f tmp.sql

done

sh ana.sh

2.2. 运行TPC-H测试

mkdir run_log

more run_tpch.sh

--------------------------

for i in {1..22}

do

echo "begin run Q${i}, query/$i.sql , `date`"

begin_time=`date +%s.%N`

PGPASSWORD=tpch psql -h 192.168.0.20 -p 47001 -d tpch -U tpch -f query/${i}.sql > ./run_log/log_q${i}.out

rc=$?

end_time=`date +%s.%N`

cost=`echo "$end_time-$begin_time"|bc`

total_cost=`echo "$total_cost+$cost"|bc`

if [ $rc -ne 0 ] ; then

printf "run Q%s fail, cost: %.2f, totalCost: %.2f, `date`\n" $i $cost $total_cost

else

printf "run Q%s succ, cost: %.2f, totalCost: %.2f, `date`\n" $i $cost $total_cost

fi

done

sh run_tpch.sh

备注:需要该节点已安装bc包:yum install bc

2.3. TPC-H测试结果

第一次测试(1GB)结果

| SQL序号 | 运行时长(秒) | 备注 |

|---|---|---|

| 1 | 2.98 | |

| 2 | 104.96 | |

| 3 | 1.35 | |

| 4 | 0.81 | |

| 5 | 2.56 | |

| 6 | 0.31 | |

| 7 | 1.19 | |

| 8 | 2.34 | |

| 9 | 81.32 | |

| 10 | 0.93 | |

| 11 | 0.49 | |

| 12 | 0.84 | |

| 13 | 1.23 | |

| 14 | 0.24 | |

| 15 | 0.59 | |

| 16 | 1.16 | |

| 17 | 2.99 | |

| 18 | 11.18 | |

| 19 | 0.69 | |

| 20 | 14.44 | |

| 22 | 0.74 |

附:以上测试是对数据库对象进行了分区的,在此之前,单独测试了一轮对表不分区的方式,即由Klustron自由确定表所存放的shard,测试结果如下:

| SQL序号 | 运行时长(秒) | 备注 |

|---|---|---|

| 1 | 3.77 | |

| 2 | 0.42 | |

| 3 | 1.36 | |

| 4 | 2.43 | |

| 5 | 1.41 | |

| 6 | 0.35 | |

| 7 | 0.67 | |

| 8 | 2.00 | |

| 9 | 8.29 | |

| 10 | 2.82 | |

| 11 | 0.43 | |

| 12 | 1.19 | |

| 13 | 2.15 | |

| 14 | 0.23 | |

| 15 | 0.91 | |

| 16 | 1.61 | |

| 17 | 14.49 | |

| 18 | 4.28 | |

| 19 | 0.52 | |

| 20 | 1.95 | |

| 21 | 1.15 | |

| 22 | 0.43 |

第二次测试(20GB)结果

| SQL序号 | 运行时长(秒) | 备注 |

|---|---|---|

| 1 | 46.88 | |

| 2 | 1472.44 | |

| 3 | 28.57 | |

| 4 | 12.56 | |

| 5 | 55.08 | |

| 6 | 4.12 | |

| 7 | 18.54 | |

| 8 | 40.18 | |

| 9 | 307.81 | |

| 10 | 38.02 | |

| 11 | 8.36 | |

| 12 | 14.87 | |

| 13 | 33.47 | |

| 14 | 3.06 | |

| 15 | 9.82 | |

| 16 | 19.96 | |

| 17 | 170.33 | |

| 18 | 242.79 | |

| 19 | 11.89 | |

| 20 | 68.03 | |

| 21 | 501.67 | |

| 22 | 4.69 |

03 Klustron与Greenplum TPC-H测试结果汇总

第一次测试(1GB)结果对比

| SQL序号 | Klustron非分区 运行时长(秒) | Klustron分区 运行时长(秒) | Greenplum 运行时长(秒) |

|---|---|---|---|

| 1 | 3.77 | 2.98 | 3.10 |

| 2 | 0.42 | 104.96 | 0.65 |

| 3 | 1.36 | 1.35 | 1.12 |

| 4 | 2.43 | 0.81 | 0.99 |

| 5 | 1.41 | 2.56 | 1.21 |

| 6 | 0.35 | 0.31 | 0.12 |

| 7 | 0.67 | 1.19 | 1.10 |

| 8 | 2.00 | 2.34 | 1.14 |

| 9 | 8.29 | 81.32 | 1.48 |

| 10 | 2.82 | 0.93 | 1.01 |

| 11 | 0.43 | 0.49 | 0.27 |

| 12 | 1.19 | 0.84 | 0.95 |

| 13 | 2.15 | 1.23 | 0.53 |

| 14 | 0.23 | 0.24 | 0.11 |

| 15 | 0.91 | 0.59 | 0.22 |

| 16 | 1.61 | 1.16 | 0.21 |

| 17 | 14.49 | 2.99 | 1.96 |

| 18 | 4.28 | 11.18 | 1.98 |

| 19 | 0.52 | 0.69 | 0.16 |

| 20 | 1.95 | 14.44 | 0.87 |

| 21 | 1.15 | 365.06 | 1.98 |

| 22 | 0.43 | 0.74 | 0.30 |

第二次测试(20GB)结果对比

| SQL序号 | Klustron分区 运行时长(秒) | Greenplum 运行时长(秒) |

|---|---|---|

| 1 | 46.88 | 40.34 |

| 2 | 1472.44 | 2.61 |

| 3 | 28.57 | 8.24 |

| 4 | 12.56 | 10.66 |

| 5 | 55.08 | 9.08 |

| 6 | 4.12 | 1.31 |

| 7 | 18.54 | 7.32 |

| 8 | 40.18 | 6.53 |

| 9 | 307.81 | 14.33 |

| 10 | 38.02 | 7.71 |

| 11 | 8.36 | 1.41 |

| 12 | 14.87 | 7.07 |

| 13 | 33.47 | 7.28 |

| 14 | 3.06 | 4.32 |

| 15 | 9.82 | 10.19 |

| 16 | 19.96 | 2.21 |

| 17 | 170.33 | 73.04 |

| 18 | 242.79 | 50.78 |

| 19 | 11.89 | 0.84 |

| 20 | 68.03 | 10.62 |

| 21 | 501.67 | 24.15 |

| 22 | 4.69 | 4.38 |

结果分析

在2.3节中Klustron的计算节点的并行查询相关参数使用默认值,因此最多有两个工作进程执行同一个查询。未来我们还会使用32或者64个进程来再次跑TPCH测试,并且把结果补充到这个文档中。

第一次测试中,大多数测例,Klustron与Greenplum的时耗差别很小; 有个别测例,klustron的性能还有较大问题。第二次测试中数据量显著增大到20GB,可以看到KunlunBase OLAP分析性能还有需要改进的空间。我们会在后续版本解决。

由于TPC-H 都是只读测例,因此shard没有安装备机,并且修改了这几个配置参数:enable_fullsync=false,sync_binlog=0, innodb_flush_log_at_trx_commit = 2。这写改动对测试结果没有任何影响。

KunlunBase 在数据更新方面的性能远远好于 Greenplum,参考二者的TPCC 性能对比结果。