Klustron与Greenplum进行性能对比测试TPCC

Klustron与Greenplum进行性能对比测试TPCC

概述

通过使用业界流行的TPCC性能测试工具(BenchmarkSQL),针对Klustron-1.2 和Greenplum-6.24.5 分别进行测试,获得性能对比结论。

测试环境一: (Greenplum)

Greenplum版本:6.24.5-rhel8-x86_64

| 节点类型 | IP | 端口 |

|---|---|---|

| Master | 192.168.0.20 | 5432 |

| Seg1节点 | 192.168.0.21 | 6000 |

| Seg2节点 | 192.168.0.22 | 6000 |

运行环境: CentOS 8.5 Linux 64位,CPU:AMD Ryzen 9 7950X 16-Core Processor, MEM: 128G, 存储:M.2 NVME SSD PCIE 4 固态 2T

测试环境二 : (Klustron)

| 节点类型 | IP | 端口 |

|---|---|---|

| 计算节点 | 192.168.0.20 | 47001 |

| Shard1主节点 | 192.168.0.21 | 57003 |

| Shard2主节点 | 192.168.0.22 | 57005 |

| XPanel | 192.168.0.20 | 40580 |

运行环境:CentOS 8.5 Linux 64位,CPU:AMD Ryzen 9 7950X 16-Core Processor, MEM: 128G, 存储:M.2 NVME SSD PCIE 4 固态 2T

测试环境三:( BenchmarkSQL )

BenchmarkSQL: v5.0

部署模式:单节点

重点参数:

warehouses=50

terminals=100

runMins=30

newOrderWeight=45

paymentWeight=43

orderStatusWeight=4

deliveryWeight=4

stockLevelWeight=4

运行环境:CentOS 8.5 Linux 64位,CPU:AMD Ryzen 9 7950X 16-Core Processor, MEM: 128G, 存储:M.2 NVME SSD PCIE 4 固态 2T

IP: 192.168.0.19

备注:BenchmarkSQL所在的Linux 环境,需要安装好R,否则,测试结果报告中将不会显示图片分析结果

01 Greenplum性能测试

1.1. 测试准备

1.1.1. Greenplum安装过程

《略》

1.1.2. 参数调整(在Master节点执行)

gpconfig -c shared_buffers -v 32GB

gpconfig -c statement_timeout -v 6000000

gpconfig -c lock_timeout -v 1200000

gpconfig -c log_min_duration_statement -v 1200000

gpconfig -c effective_cache_size -v 64GB

gpconfig -c work_mem -v 64MB

gpconfig -c wal_buffers -v 64MB

gpconfig -c autovacuum -v false

gpconfig -c optimizer -v off

gpstop -M fast

gpstart

1.1.3. 调整BenchmarkSQL的配置,以适应Greenplum数据库的架构

修改BenchmarkSQL 的建表脚本(对表进行分区)

cd benchmarksql5.0/run/sql.common

cat << EOF > tableCreates.sql

create table bmsql_config (

cfg_name varchar(30) primary key,

cfg_value varchar(50)

);

create table bmsql_warehouse (

w_id integer not null,

w_ytd decimal(12,2),

w_tax decimal(4,4),

w_name varchar(10),

w_street_1 varchar(20),

w_street_2 varchar(20),

w_city varchar(20),

w_state char(2),

w_zip char(9)

) DISTRIBUTED BY (w_id);

create table bmsql_district (

d_w_id integer not null,

d_id integer not null,

d_ytd decimal(12,2),

d_tax decimal(4,4),

d_next_o_id integer,

d_name varchar(10),

d_street_1 varchar(20),

d_street_2 varchar(20),

d_city varchar(20),

d_state char(2),

d_zip char(9)

) DISTRIBUTED BY (d_w_id);

create table bmsql_customer (

c_w_id integer not null,

c_d_id integer not null,

c_id integer not null,

c_discount decimal(4,4),

c_credit char(2),

c_last varchar(16),

c_first varchar(16),

c_credit_lim decimal(12,2),

c_balance decimal(12,2),

c_ytd_payment decimal(12,2),

c_payment_cnt integer,

c_delivery_cnt integer,

c_street_1 varchar(20),

c_street_2 varchar(20),

c_city varchar(20),

c_state char(2),

c_zip char(9),

c_phone char(16),

c_since timestamp,

c_middle char(2),

c_data varchar(500)

) DISTRIBUTED BY (c_w_id);

create sequence bmsql_hist_id_seq;

create table bmsql_history (

hist_id integer,

h_c_id integer,

h_c_d_id integer,

h_c_w_id integer,

h_d_id integer,

h_w_id integer,

h_date timestamp,

h_amount decimal(6,2),

h_data varchar(24)

) DISTRIBUTED BY (h_c_w_id);

create table bmsql_new_order (

no_w_id integer not null,

no_d_id integer not null,

no_o_id integer not null

) DISTRIBUTED BY (no_w_id);

create table bmsql_oorder (

o_w_id integer not null,

o_d_id integer not null,

o_id integer not null,

o_c_id integer,

o_carrier_id integer,

o_ol_cnt integer,

o_all_local integer,

o_entry_d timestamp

) DISTRIBUTED BY (o_w_id);

create table bmsql_order_line (

ol_w_id integer not null,

ol_d_id integer not null,

ol_o_id integer not null,

ol_number integer not null,

ol_i_id integer not null,

ol_delivery_d timestamp,

ol_amount decimal(6,2),

ol_supply_w_id integer,

ol_quantity integer,

ol_dist_info char(24)

) DISTRIBUTED BY (ol_w_id);

create table bmsql_item (

i_id integer not null,

i_name varchar(24),

i_price decimal(5,2),

i_data varchar(50),

i_im_id integer

);

create table bmsql_stock (

s_w_id integer not null,

s_i_id integer not null,

s_quantity integer,

s_ytd integer,

s_order_cnt integer,

s_remote_cnt integer,

s_data varchar(50),

s_dist_01 char(24),

s_dist_02 char(24),

s_dist_03 char(24),

s_dist_04 char(24),

s_dist_05 char(24),

s_dist_06 char(24),

s_dist_07 char(24),

s_dist_08 char(24),

s_dist_09 char(24),

s_dist_10 char(24)

) DISTRIBUTED BY (s_w_id);

alter table bmsql_warehouse add constraint warehouse_pkey primary key (w_id);

alter table bmsql_district add constraint district_pkey primary key (d_w_id, d_id);

alter table bmsql_customer add constraint customer_pkey primary key (c_w_id, c_d_id, c_id);

create index bmsql_customer_idx1 on bmsql_customer (c_w_id, c_d_id, c_last, c_first);

alter table bmsql_oorder add constraint oorder_pkey primary key (o_w_id, o_d_id, o_id);

create unique index bmsql_oorder_idx1 on bmsql_oorder (o_w_id, o_d_id, o_carrier_id, o_id);

alter table bmsql_new_order add constraint new_order_pkey primary key (no_w_id, no_d_id, no_o_id);

alter table bmsql_order_line add constraint order_line_pkey primary key (ol_w_id, ol_d_id, ol_o_id, ol_number);

alter table bmsql_stock add constraint stock_pkey primary key (s_w_id, s_i_id);

alter table bmsql_item add constraint item_pkey primary key (i_id);

EOF

mv indexCreates.sql indexCreates.sql_old

mv foreignKeys.sql foreignKeys.sql.bak

修改:/home/gpadmin/benchmarksql-5.0/run/sql.postgres/extraHistID.sql ,将

```sql

alter table bmsql_history add primary key (hist_id);

修改为:

alter table bmsql_history add primary key (hist_id,h_c_w_id);

mv /home/kunlun/benchmarksql-5.0/run/sql.postgres/buildFinish.sql /home/kunlun/benchmarksql-5.0/run/sql.postgres/buildFinish.sql.bak

1.1.4. 创建测试用户及数据库

psql -h 192.168.0.20 -p5432 -d gpdw #Greenplum安装完成时创建了gpdw数据库

create user bench with password ‘bench’;

create database bench with owner bench ;

alter user bench with superuser;

1.1.5. 修改benchmarksql5.0/run/props.pg的参数

db=bench

driver=org.postgresql.Driver

conn=jdbc:postgresql://192.168.0.20:5432/bench

user=bench

password=bench

warehouses=50

loadWorkers=50

terminals=100

//To run specified transactions per terminal- runMins must equal zero

runTxnsPerTerminal=0

//To run for specified minutes- runTxnsPerTerminal must equal zero

runMins=30

//Number of total transactions per minute

limitTxnsPerMin=0

//Set to true to run in 4.x compatible mode. Set to false to use the

//entire configured database evenly.

terminalWarehouseFixed=false

//The following five values must add up to 100

//The default percentages of 45, 43, 4, 4 & 4 match the TPC-C spec

newOrderWeight=45

paymentWeight=43

orderStatusWeight=4

deliveryWeight=4

stockLevelWeight=4

// Directory name to create for collecting detailed result data.

// Comment this out to suppress.

resultDirectory=my_result_%tY-%tm-%td_%tH%tM%tS

osCollectorScript=./misc/os_collector_linux.py

osCollectorInterval=1

osCollectorSSHAddr=gpadmin@192.168.0.21

osCollectorDevices=net_enp4s0 blk_ nvme1n1

注:对Greenplum所运行的集群机器的存储节点之一进行资源监控配置,在BenchmarkSQL配置文件(props.pg)中设置资源监控,搜集压测中的CPU,存储IO,网络IO相关的负载参数, 关键参数:osCollectorSSHAddr=gpadmin@192.168.0.21 #需要配置ssh免密访问

osCollectorDevices=net_enp4s0 blk_ nvme1n1 #需要依目标机的网卡接口名及存储设备名调整名称。

1.1.6. 执行测试数据生成

cd benchmarksql5.0/run/

./runDatabaseBuild.sh props.pg

1.1.7. 对测试数据表及列做一次分析,收集统计信息

将 tableCreates.sql拷贝至Master节点,并在当前目录下编辑一个名为ana.sh的脚本,如下:

for i in `cat ./tableCreates.sql | grep 'create table' | grep -v partition | awk '{print $3}'`

do

echo "select * from $i limit 1;" > a.sql

clomun=`psql -h 192.168.0.20 -p 5432 -U bench bench -f a.sql | sed -n 1p | sed 's/|/,/g'`

analyze="analyze $i($clomun)"

echo $analyze

echo $analyze > tmp.sql

psql -h 192.168.0.20 -p 5432 -U bench bench -f tmp.sql

done

运行该脚本完成对所有测试表的统计分析。

1.2. 进行测试

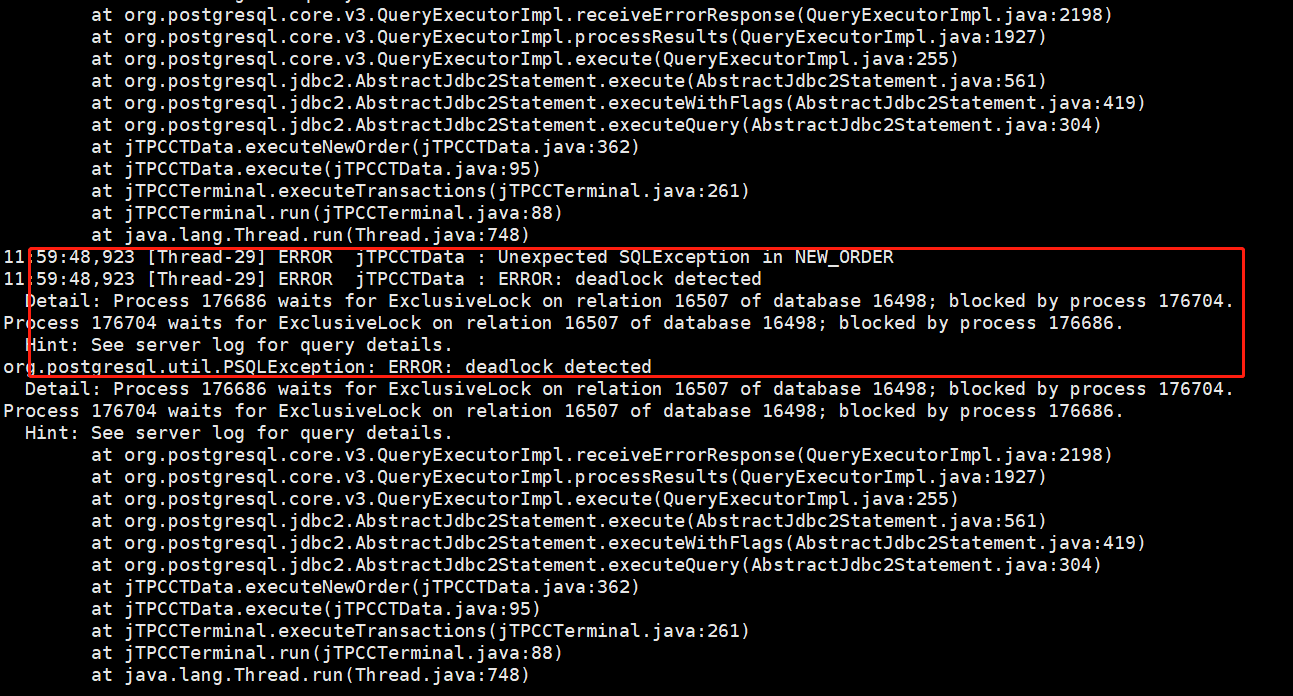

执行 runBenchMark.sh props.pg, 运行测试,压测完成后,在当前目录会生成类似my_result_2023-07-25_112947 的目录。测试运行中,Greenplum在此测试场景下,除了输出正常的log信息外,有死锁提示信息持续输出,截图如下所示:

1.3. 测试报告生成及结果阅读

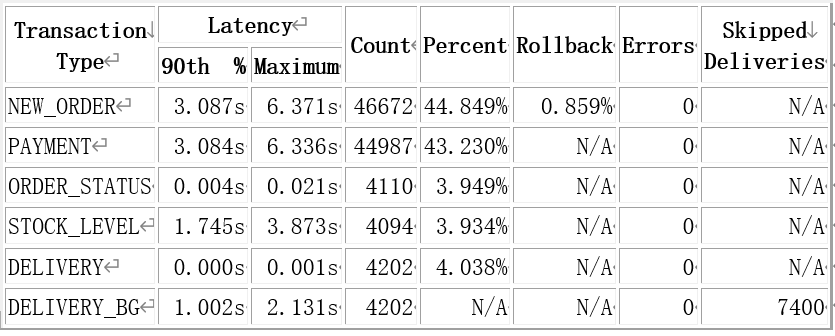

执行generateReport.sh < my_result目录>将生成html分析报告,以当前配置的压测用。

(warehouses=50,terminals=100,runMins=30**)**在报告文件中获得的关键输出信息如下:

| Overall tpmC: | 1555.73 |

|---|---|

| Overall tpmTotal: | 3468.83 |

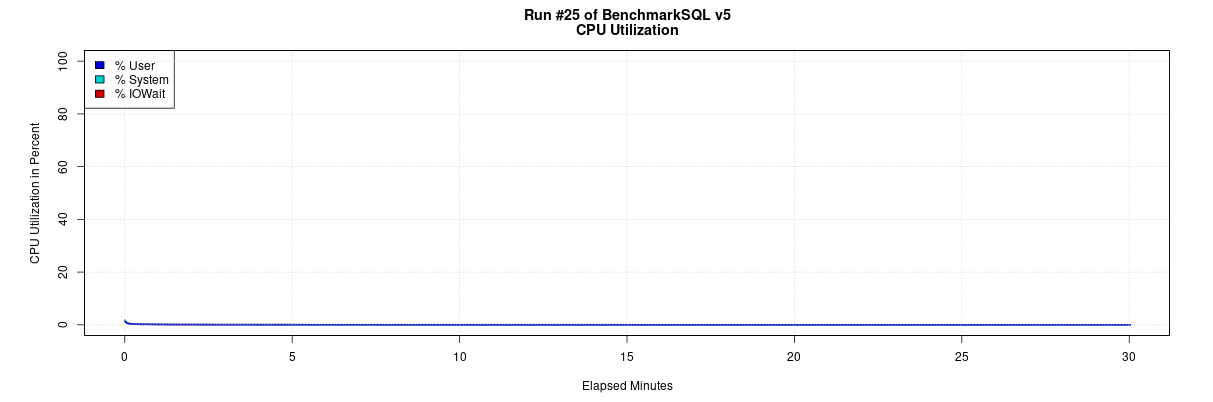

Greenplum集群存储节点1所在机器的负载监测情况如下:

_

02 Klustron性能测试

2.1. 测试准备

2.1.1. 安装Klustron

<略>

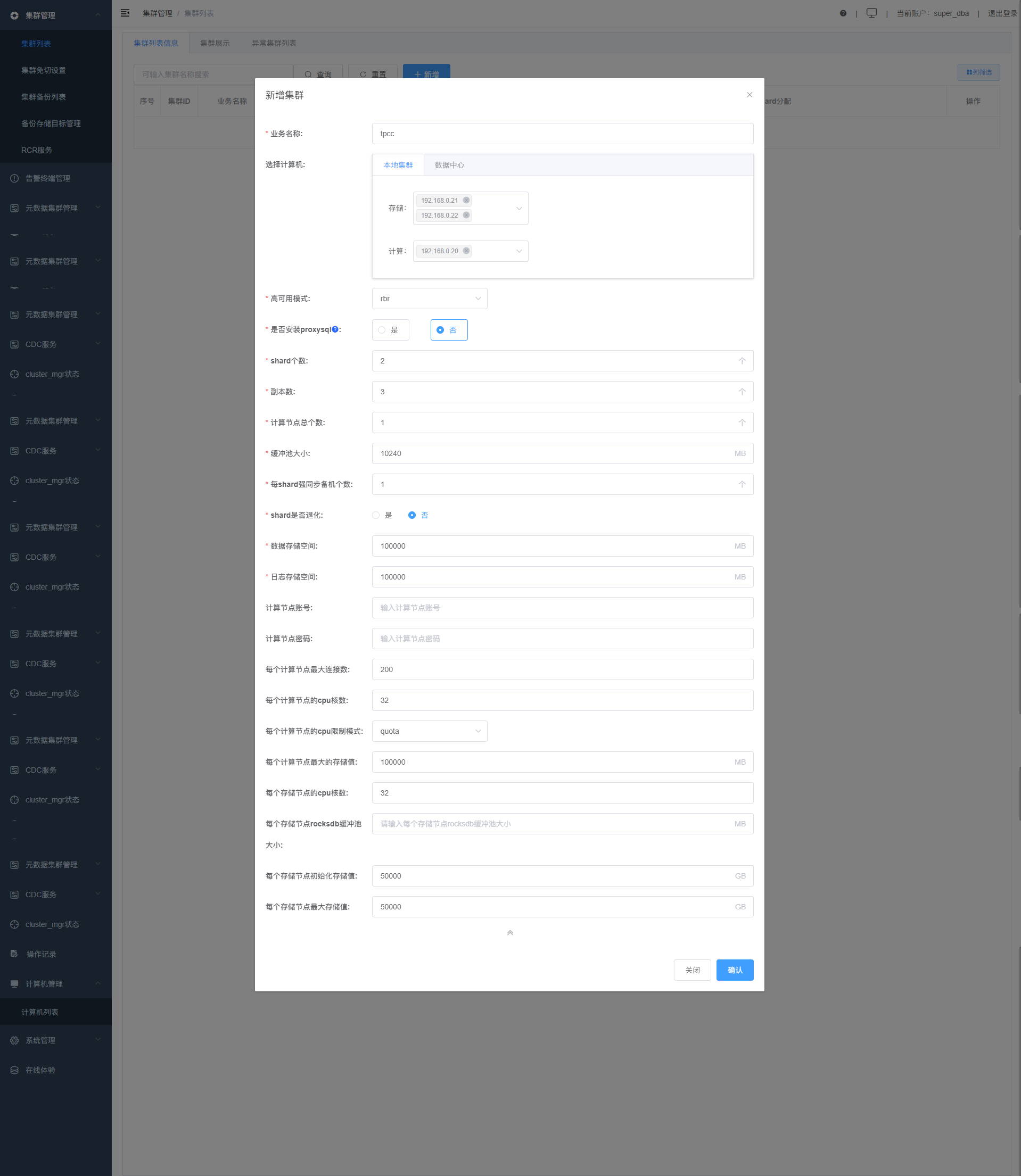

附用于测试创建的集群参数设定

2.1.2. 修改计算节点及存储节点的参数

计算节点(PG)重点参数:

shared_buffers = 34359738368 (%25 RAM)

statement_timeout=6000000

mysql_read_timeout=1200

mysql_write_timeout=1200

lock_timeout=1200000

log_min_duration_statement=1200000

effective_cache_size = 68719476736 (%50 RAM)

work_mem = 8MB

wal_buffers = ‘64MB’

autovacuum=false

psql -h 192.168.0.20 -p 47001 -U abc postgres

alter system set shared_buffers='32GB';

alter system set statement_timeout=6000000;

alter system set mysql_read_timeout=1200;

alter system set mysql_write_timeout=1200;

alter system set lock_timeout=1200000;

alter system set log_min_duration_statement=1200000;

alter system set effective_cache_size = '64GB';

alter system set work_mem = '8MB';

alter system set wal_buffers='64MB';

alter system set autovacuum=false;

alter system set log_min_messages='DEBUG1' ; #需要debug sql时开启

alter system set enable_sql_log=on; #需要debug sql 时开启

pg_ctl restart -D /nvme2/kunlun/server_datadir/47001

存储节点(MYSQL)重点参数:

innodb_buffer_pool_size=32*1024*1024*1024

lock_wait_timeout=1200

innodb_lock_wait_timeout=1200

fullsync_timeout=1200000

enable_fullsync=false

innodb_flush_log_at_trx_commit=2

sync_binlog=0

max_binlog_size=1*1024*1024*1024

mysql -h 192.168.0.21 -P 57003 -upgx -ppgx_pwd

mysql -h 192.168.0.22 -P 57005 -upgx -ppgx_pwd

set global innodb_buffer_pool_size=32*1024*1024*1024;

set global lock_wait_timeout=1200;

set global innodb_lock_wait_timeout=1200;

set global fullsync_timeout=1200000;

set global enable_fullsync=false;

set global innodb_flush_log_at_trx_commit=2;

set global sync_binlog=0;

set global max_binlog_size=1*1024*1024*1024;

set global enable_fullsync=off; #所有shard主节点关闭全同步

set enable_fullsync=off; #所有shard主节点关闭全同步

set global general_log=on ; #需要debug SQL时打开

免切设置为10800秒(Xpanel)

元数据集群MySQL MGR 3节点参数调整:

export LD_LIBRARY_PATH=/nvme2/kunlun/instance_binaries/storage/57001/Klustron-storage-1.2.1/lib:$LD_LIBRARY_PATH ;

/nvme2/kunlun/instance_binaries/storage/57001/Klustron-storage-1.2.1/bin/mysql --connect-expired-password -S/nvme2/kunlun/storage_logdir/57001/mysql.sock -uroot -proot

set global innodb_buffer_pool_size=1*1024*1024*1024; (缺省128M)

2.1.3. 调整BenchmarkSQL的配置,以适应Klustron数据的架构。

修改BenchmarkSQL 的建表脚本(对表进行分区,指定分片)

cd benchmarksql5.0/run/sql.common

cat << EOF > tableCreates.sql

create table bmsql_config (

cfg_name varchar(30) primary key,

cfg_value varchar(50)

);

create table bmsql_warehouse (

w_id integer not null,

w_ytd decimal(12,2),

w_tax decimal(4,4),

w_name varchar(10),

w_street_1 varchar(20),

w_street_2 varchar(20),

w_city varchar(20),

w_state char(2),

w_zip char(9)

) partition by hash(w_id);

create table bmsql_warehouse_0 partition of bmsql_warehouse for values with (MODULUS 6, REMAINDER 0) with (shard = 1);

create table bmsql_warehouse_1 partition of bmsql_warehouse for values with (MODULUS 6, REMAINDER 1) with (shard = 1);

create table bmsql_warehouse_2 partition of bmsql_warehouse for values with (MODULUS 6, REMAINDER 2) with (shard = 1);

create table bmsql_warehouse_3 partition of bmsql_warehouse for values with (MODULUS 6, REMAINDER 3) with (shard = 2);

create table bmsql_warehouse_4 partition of bmsql_warehouse for values with (MODULUS 6, REMAINDER 4) with (shard = 2);

create table bmsql_warehouse_5 partition of bmsql_warehouse for values with (MODULUS 6, REMAINDER 5) with (shard = 2);

create table bmsql_district (

d_w_id integer not null,

d_id integer not null,

d_ytd decimal(12,2),

d_tax decimal(4,4),

d_next_o_id integer,

d_name varchar(10),

d_street_1 varchar(20),

d_street_2 varchar(20),

d_city varchar(20),

d_state char(2),

d_zip char(9)

) partition by hash(d_w_id);

create table bmsql_district_0 partition of bmsql_district for values with (MODULUS 6, REMAINDER 0) with (shard = 1);

create table bmsql_district_1 partition of bmsql_district for values with (MODULUS 6, REMAINDER 1) with (shard = 1);

create table bmsql_district_2 partition of bmsql_district for values with (MODULUS 6, REMAINDER 2) with (shard = 1);

create table bmsql_district_3 partition of bmsql_district for values with (MODULUS 6, REMAINDER 3) with (shard = 2);

create table bmsql_district_4 partition of bmsql_district for values with (MODULUS 6, REMAINDER 4) with (shard = 2);

create table bmsql_district_5 partition of bmsql_district for values with (MODULUS 6, REMAINDER 5) with (shard = 2);

create table bmsql_customer (

c_w_id integer not null,

c_d_id integer not null,

c_id integer not null,

c_discount decimal(4,4),

c_credit char(2),

c_last varchar(16),

c_first varchar(16),

c_credit_lim decimal(12,2),

c_balance decimal(12,2),

c_ytd_payment decimal(12,2),

c_payment_cnt integer,

c_delivery_cnt integer,

c_street_1 varchar(20),

c_street_2 varchar(20),

c_city varchar(20),

c_state char(2),

c_zip char(9),

c_phone char(16),

c_since timestamp,

c_middle char(2),

c_data varchar(500)

) partition by hash(c_w_id);

create table bmsql_customer_0 partition of bmsql_customer for values with (MODULUS 6, REMAINDER 0) with (shard = 1);

create table bmsql_customer_1 partition of bmsql_customer for values with (MODULUS 6, REMAINDER 1) with (shard = 1);

create table bmsql_customer_2 partition of bmsql_customer for values with (MODULUS 6, REMAINDER 2) with (shard = 1);

create table bmsql_customer_3 partition of bmsql_customer for values with (MODULUS 6, REMAINDER 3) with (shard = 2);

create table bmsql_customer_4 partition of bmsql_customer for values with (MODULUS 6, REMAINDER 4) with (shard = 2);

create table bmsql_customer_5 partition of bmsql_customer for values with (MODULUS 6, REMAINDER 5) with (shard = 2);

create sequence bmsql_hist_id_seq;

create table bmsql_history (

hist_id integer,

h_c_id integer,

h_c_d_id integer,

h_c_w_id integer,

h_d_id integer,

h_w_id integer,

h_date timestamp,

h_amount decimal(6,2),

h_data varchar(24)

) partition by hash(h_c_w_id);

create table bmsql_history_0 partition of bmsql_history for values with (MODULUS 6, REMAINDER 0) with (shard = 1);

create table bmsql_history_1 partition of bmsql_history for values with (MODULUS 6, REMAINDER 1) with (shard = 1);

create table bmsql_history_2 partition of bmsql_history for values with (MODULUS 6, REMAINDER 2) with (shard = 1);

create table bmsql_history_3 partition of bmsql_history for values with (MODULUS 6, REMAINDER 3) with (shard = 2);

create table bmsql_history_4 partition of bmsql_history for values with (MODULUS 6, REMAINDER 4) with (shard = 2);

create table bmsql_history_5 partition of bmsql_history for values with (MODULUS 6, REMAINDER 5) with (shard = 2);

create table bmsql_new_order (

no_w_id integer not null,

no_d_id integer not null,

no_o_id integer not null

) partition by hash(no_w_id);

create table bmsql_new_order_0 partition of bmsql_new_order for values with (MODULUS 6, REMAINDER 0) with (shard = 1);

create table bmsql_new_order_1 partition of bmsql_new_order for values with (MODULUS 6, REMAINDER 1) with (shard = 1);

create table bmsql_new_order_2 partition of bmsql_new_order for values with (MODULUS 6, REMAINDER 2) with (shard = 1);

create table bmsql_new_order_3 partition of bmsql_new_order for values with (MODULUS 6, REMAINDER 3) with (shard = 2);

create table bmsql_new_order_4 partition of bmsql_new_order for values with (MODULUS 6, REMAINDER 4) with (shard = 2);

create table bmsql_new_order_5 partition of bmsql_new_order for values with (MODULUS 6, REMAINDER 5) with (shard = 2);

create table bmsql_oorder (

o_w_id integer not null,

o_d_id integer not null,

o_id integer not null,

o_c_id integer,

o_carrier_id integer,

o_ol_cnt integer,

o_all_local integer,

o_entry_d timestamp

) partition by hash(o_w_id);

create table bmsql_oorder_0 partition of bmsql_oorder for values with (MODULUS 6, REMAINDER 0) with (shard = 1);

create table bmsql_oorder_1 partition of bmsql_oorder for values with (MODULUS 6, REMAINDER 1) with (shard = 1);

create table bmsql_oorder_2 partition of bmsql_oorder for values with (MODULUS 6, REMAINDER 2) with (shard = 1);

create table bmsql_oorder_3 partition of bmsql_oorder for values with (MODULUS 6, REMAINDER 3) with (shard = 2);

create table bmsql_oorder_4 partition of bmsql_oorder for values with (MODULUS 6, REMAINDER 4) with (shard = 2);

create table bmsql_oorder_5 partition of bmsql_oorder for values with (MODULUS 6, REMAINDER 5) with (shard = 2);

create table bmsql_order_line (

ol_w_id integer not null,

ol_d_id integer not null,

ol_o_id integer not null,

ol_number integer not null,

ol_i_id integer not null,

ol_delivery_d timestamp,

ol_amount decimal(6,2),

ol_supply_w_id integer,

ol_quantity integer,

ol_dist_info char(24)

) partition by hash(ol_w_id);

create table bmsql_order_line_0 partition of bmsql_order_line for values with (MODULUS 6, REMAINDER 0) with (shard = 1);

create table bmsql_order_line_1 partition of bmsql_order_line for values with (MODULUS 6, REMAINDER 1) with (shard = 1);

create table bmsql_order_line_2 partition of bmsql_order_line for values with (MODULUS 6, REMAINDER 2) with (shard = 1);

create table bmsql_order_line_3 partition of bmsql_order_line for values with (MODULUS 6, REMAINDER 3) with (shard = 2);

create table bmsql_order_line_4 partition of bmsql_order_line for values with (MODULUS 6, REMAINDER 4) with (shard = 2);

create table bmsql_order_line_5 partition of bmsql_order_line for values with (MODULUS 6, REMAINDER 5) with (shard = 2);

create table bmsql_item (

i_id integer not null,

i_name varchar(24),

i_price decimal(5,2),

i_data varchar(50),

i_im_id integer

) with (shard = all);

create table bmsql_stock (

s_w_id integer not null,

s_i_id integer not null,

s_quantity integer,

s_ytd integer,

s_order_cnt integer,

s_remote_cnt integer,

s_data varchar(50),

s_dist_01 char(24),

s_dist_02 char(24),

s_dist_03 char(24),

s_dist_04 char(24),

s_dist_05 char(24),

s_dist_06 char(24),

s_dist_07 char(24),

s_dist_08 char(24),

s_dist_09 char(24),

s_dist_10 char(24)

) partition by hash(s_w_id);

create table bmsql_stock_0 partition of bmsql_stock for values with (MODULUS 6, REMAINDER 0) with (shard = 1);

create table bmsql_stock_1 partition of bmsql_stock for values with (MODULUS 6, REMAINDER 1) with (shard = 1);

create table bmsql_stock_2 partition of bmsql_stock for values with (MODULUS 6, REMAINDER 2) with (shard = 1);

create table bmsql_stock_3 partition of bmsql_stock for values with (MODULUS 6, REMAINDER 3) with (shard = 2);

create table bmsql_stock_4 partition of bmsql_stock for values with (MODULUS 6, REMAINDER 4) with (shard = 2);

create table bmsql_stock_5 partition of bmsql_stock for values with (MODULUS 6, REMAINDER 5) with (shard = 2);

alter table bmsql_warehouse add constraint warehouse_pkey primary key (w_id);

alter table bmsql_district add constraint district_pkey primary key (d_w_id, d_id);

alter table bmsql_customer add constraint customer_pkey primary key (c_w_id, c_d_id, c_id);

create index bmsql_customer_idx1 on bmsql_customer (c_w_id, c_d_id, c_last, c_first);

alter table bmsql_oorder add constraint oorder_pkey primary key (o_w_id, o_d_id, o_id);

create unique index bmsql_oorder_idx1 on bmsql_oorder (o_w_id, o_d_id, o_carrier_id, o_id);

alter table bmsql_new_order add constraint new_order_pkey primary key (no_w_id, no_d_id, no_o_id);

alter table bmsql_order_line add constraint order_line_pkey primary key (ol_w_id, ol_d_id, ol_o_id, ol_number);

alter table bmsql_stock add constraint stock_pkey primary key (s_w_id, s_i_id);

alter table bmsql_item add constraint item_pkey primary key (i_id);

EOF

mv indexCreates.sql indexCreates.sql_old

mv foreignKeys.sql foreignKeys.sql.bak

修改:/home/kunlun/benchmarksql-5.0/run/sql.postgres/extraHistID.sql ,将

```sql

alter table bmsql_history add primary key (hist_id);

修改为:

alter table bmsql_history add primary key (hist_id,h_c_w_id);

mv /home/kunlun/benchmarksql-5.0/run/sql.postgres/buildFinish.sql /home/kunlun/benchmarksql-5.0/run/sql.postgres/buildFinish.sql.bak

2.1.4. 在Klustron数据中创建测试库bench和测试用户bench

psql -h 192.168.0.20 -p 47001 -U abc postgres

postgres=# create user bench with password 'bench';

postgres=# create database bench with owner bench ;

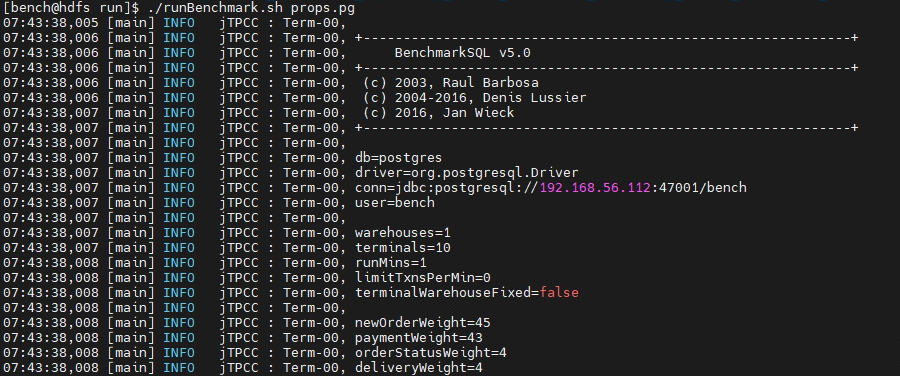

2.1.5. 修改benchmarksql5.0/run/props.pg的参数

db=postgres

driver=org.postgresql.Driver

conn=jdbc:postgresql://192.168.0.20:47001/bench

user=bench

password=bench

warehouses=50

loadWorkers=50

terminals=100

//To run specified transactions per terminal- runMins must equal zero

runTxnsPerTerminal=0

//To run for specified minutes- runTxnsPerTerminal must equal zero

runMins=30

//Number of total transactions per minute

limitTxnsPerMin=0

//Set to true to run in 4.x compatible mode. Set to false to use the

//entire configured database evenly.

terminalWarehouseFixed=false

//The following five values must add up to 100

//The default percentages of 45, 43, 4, 4 & 4 match the TPC-C spec

newOrderWeight=45

paymentWeight=43

orderStatusWeight=4

deliveryWeight=4

stockLevelWeight=4

// Directory name to create for collecting detailed result data.

// Comment this out to suppress.

resultDirectory=my_result_%tY-%tm-%td_%tH%tM%tS

osCollectorScript=./misc/os_collector_linux.py

osCollectorInterval=1

osCollectorSSHAddr=kunlun@192.168.0.21

osCollectorDevices=net_enp4s0 blk_ nvme1n1

2.1.6. 执行测试数据生成

cd benchmarksql5.0/run/

./runDatabaseBuild.sh props.pg

2.1.7. 对测试数据表及列做一次分析,收集统计信息。

将 tableCreates.sql拷贝至计算节点,并在当前目录下编辑一个名为ana.sh的脚本,如下:

for i in `cat ./tableCreates.sql | grep 'create table' | grep -v partition | awk '{print $3}'`

do

echo "select * from $i limit 1;" > a.sql

clomun=`psql -h 192.168.0.20 -p 47001 -U bench bench -f a.sql | sed -n 1p | sed 's/|/,/g'`

analyze="analyze $i($clomun)"

echo $analyze

echo $analyze > tmp.sql

psql -h 192.168.0.20 -p 47001 -U bench bench -f tmp.sql

done

运行该脚本完成对所有测试表的统计分析。

2.1.8. 对所有存储节点做一次事务清理,编辑一个名为xa.sh 的脚本 ,内容如下:

echo 'xa recover'> tmp.sql

echo $1

for i in `mysql -h 192.168.0.$1 -ppgx_pwd -upgx -P$2 < tmp.sql | awk '{print $4}' | sed '1d'`

do

printf "xa rollback \'$i\'" > tmp.sql

echo "========$i========"

mysql -h 192.168.0.$1 -ppgx_pwd -upgx -P$2 < tmp.sql

done

将其拷贝到存储节点shard1(192.168.0,21), shard2(192.168.0.22) ,并输入对应的参数,完成脚本执行。

例: sh xa.sh 21 57003

2.2. 进行测试

执行 runBenchMark.sh props.pg, 将有类似如下信息输出:

_

压测完成后,在当前目录会生成类似:my_result_2023-04-16_074338 的目录

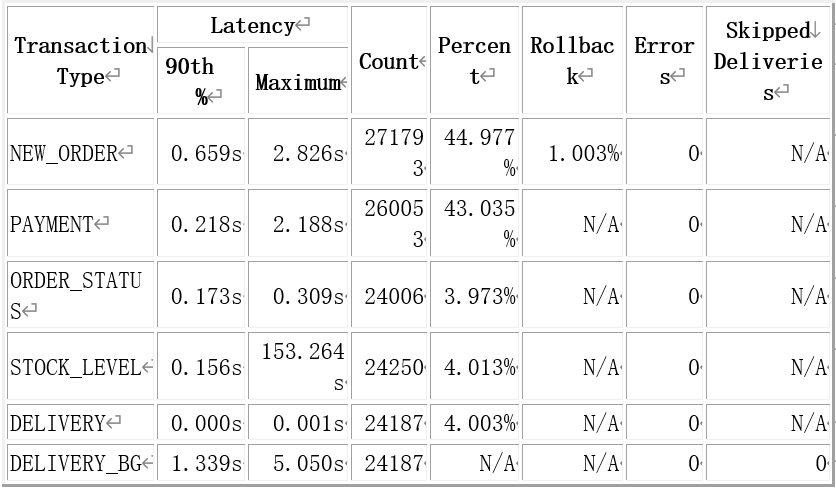

2.3. 测试报告生成及结果阅读

执行generateReport.sh < my_result目录>将生成html分析报告,以当前配置的压测用例。(warehouses=50,terminals=100,runMins=30**)**在报告文件中获得的关键输出信息如下:

| Overall tpmC: | 9059.77 |

|---|---|

| Overall tpmTotal: | 20142.97 |

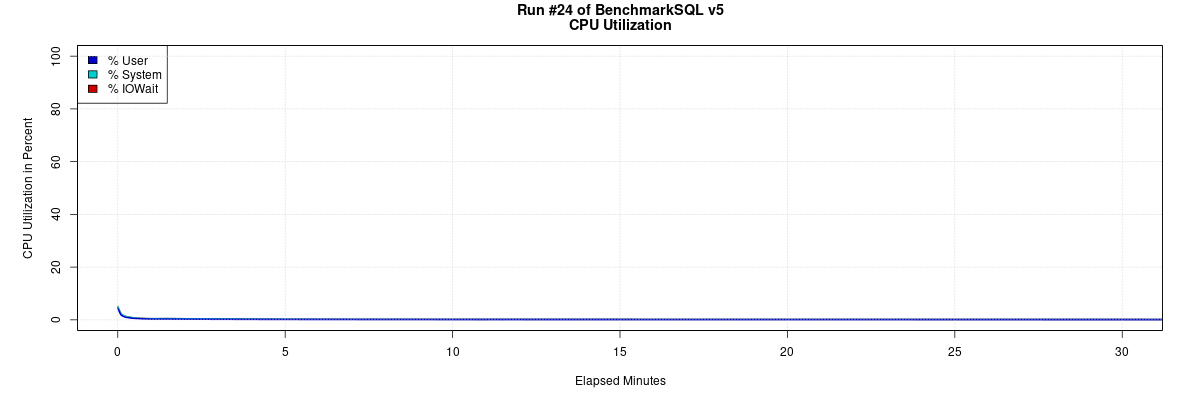

Klustron存储节点(192.168.0.21)所在机器的负载监测情况如下:

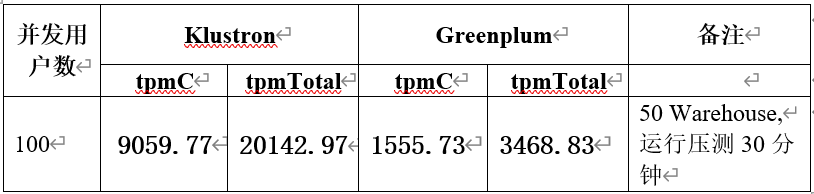

03 TPCC性能测试对比结果汇总

50warehouses 对比

结果分析

从上述性能数据可以看出KunlunBase 比Greenplum在TPCC方面有巨大的性能优势,这种优势在实际使用场景中主要体现在持续流式数据更新的速度,KunlunBase比Greenplum要更快,而这具有重大的现实意义和价值。

一个典型的场景是数据更新从多个业务系统的数据库持续流入,并且从诸如APP和网页埋点数据流入,此时流入速度如果跟不上数据产生的速度就会出现分析库中的数据版本举例数据源越拉越远的问题。

另一类场景是周期性地汇聚其他数据源中的数据,此时数据更新速度直接决定了灌入数据的速度。如果数据更新的灌入速度太低,而用户业务量增长导致需要灌入的数据量反而越来越大,就会导致数据灌入的时耗会越来越大。

由于Greenplum的数据存储在PostgreSQL中,而PostgreSQL的存储引擎的固有弱点导致其不得不经常做VACUUM来收回存储空间,这个VACUUM 在处理一个数据表是需要锁表,这就会导致数据表在较长时间无法用于更新,对用户来说这通常是无法忍受的缺陷。