Deploying a Single-Node HDFS as Klustron Backup Storage


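Single-node HDFS deployment is intended only for internal testing, where it offers a quick and convenient way to install HDFS. For a production system, you must deploy and configure an HDFS high-availability (HA) cluster; see the referenced article for the procedure.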

01 Install the Java environment

The installation steps are omitted here.

To verify the installation:

[root@kunlun ~]# java -version

java version "1.8.0_171"

Java(TM) SE Runtime Environment (build 1.8.0_171-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.171-b11, mixed mode)

[root@kunlun ~]#
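The manual check above can also be scripted. The following is a minimal sketch, assuming `java` is on the `PATH`; the helper names `java_major_version` and `detect_java_version` are hypothetical and are not part of Hadoop or Klustron.

```bash
#!/usr/bin/env bash
# Extract the major Java version from a version string
# such as "1.8.0_171" (legacy scheme) or "11.0.2".
java_major_version() {
    local v="$1"
    case "$v" in
        1.*) v="${v#1.}" ;;   # legacy scheme: 1.8.0_171 -> 8.0_171
    esac
    echo "${v%%[._-]*}"       # keep what precedes the first '.', '_' or '-'
}

# Print the version string reported by the local JVM, if one is installed.
# `java -version` writes to stderr, hence the 2>&1.
detect_java_version() {
    command -v java >/dev/null 2>&1 || return 1
    java -version 2>&1 | sed -n 's/.* version "\([^"]*\)".*/\1/p' | head -n 1
}

if ver="$(detect_java_version)" && [ -n "$ver" ]; then
    echo "Java major version: $(java_major_version "$ver")"
else
    echo "java not found on PATH" >&2
fi
```

With the JDK shown in this guide, the reported version string is `1.8.0_171`, which the helper maps to major version 8.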

02 Overall directory structure

2.1. Upload hadoop-2.7.3.tar.gz to the /home/kunlun directory on the server and extract it

[root@master kunlun]# tar -zxvf hadoop-2.7.3.tar.gz

[root@master kunlun]# mv hadoop-2.7.3 hadoop

[root@master kunlun]# chown -R kunlun:kunlun /home/kunlun/hadoop

[root@master kunlun]# ll

drwxr-xr-x 9 kunlun kunlun 149 Sep 6 2022 hadoop

03 Configure environment variables

[root@kunlun hadoop]# cat ~/.bashrc

# .bashrc

# User specific aliases and functions

alias rm='rm -i'

alias cp='cp -i'

alias mv='mv -i'

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

export HADOOP_HOME=/home/kunlun/hadoop

export HADOOP_CONF_DIR=/home/kunlun/hadoop/etc/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

[root@kunlun hadoop]# source ~/.bashrc

[root@kunlun hadoop]# hadoop

Usage: hadoop [--config confdir] [COMMAND | CLASSNAME]

CLASSNAME run the class named CLASSNAME

or

where COMMAND is one of:

fs run a generic filesystem user client

version print the version

jar <jar> run a jar file

note: please use "yarn jar" to launch

YARN applications, not this command.

checknative [-a|-h] check native hadoop and compression libraries availability

distcp <srcurl> <desturl> copy file or directories recursively

archive -archiveName NAME -p <parent path> <src>* <dest> create a hadoop archive

classpath prints the class path needed to get the

credential interact with credential providers

Hadoop jar and the required libraries

daemonlog get/set the log level for each daemon

trace view and modify Hadoop tracing settings

Most commands print help when invoked w/o parameters.

[root@kunlun hadoop]#
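If the exports are added by a script rather than by hand, it helps to make the step idempotent so that repeated runs do not duplicate lines. The sketch below is one possible approach; `append_once` and `add_hadoop_env` are hypothetical helper names, and the demo writes to a scratch file rather than to `~/.bashrc`.

```bash
#!/usr/bin/env bash
# Append a line to a file only if that exact line is not already present.
append_once() {
    local line="$1" file="$2"
    touch "$file"
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Write the three Hadoop exports used in this guide into a shell profile.
# $1: profile file, $2: Hadoop install directory (defaults to the guide's path).
add_hadoop_env() {
    local profile="$1" home="${2:-/home/kunlun/hadoop}"
    append_once "export HADOOP_HOME=$home" "$profile"
    append_once "export HADOOP_CONF_DIR=$home/etc/hadoop" "$profile"
    append_once 'export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin' "$profile"
}

# Demo against a scratch file; pass ~/.bashrc instead to apply it for real.
demo_profile="$(mktemp)"
add_hadoop_env "$demo_profile"
add_hadoop_env "$demo_profile"   # the second call adds nothing
cat "$demo_profile"
```

After applying it to the real profile, run `source ~/.bashrc` as shown above so the current shell picks up the new variables.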

04 Modify the configuration files

[root@kunlun hadoop]# ls

capacity-scheduler.xml hadoop-env.sh httpfs-env.sh kms-env.sh mapred-env.sh ssl-server.xml.example

configuration.xsl hadoop-metrics2.properties httpfs-log4j.properties kms-log4j.properties mapred-queues.xml.template yarn-env.cmd

container-executor.cfg hadoop-metrics.properties httpfs-signature.secret kms-site.xml mapred-site.xml.template yarn-env.sh

core-site.xml hadoop-policy.xml httpfs-site.xml log4j.properties slaves yarn-site.xml

hadoop-env.cmd hdfs-site.xml kms-acls.xml mapred-env.cmd ssl-client.xml.example

[root@kunlun hadoop]# pwd

/home/kunlun/hadoop/etc/hadoop

[root@kunlun hadoop]#

- core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.0.135:9000</value>

</property>

<!-- Hadoop temporary directory -->

<property>

<name>hadoop.tmp.dir</name>

<value>/home/kunlun/hadoop/data/tmp</value>

</property>

</configuration>

- hdfs-site.xml

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

- hadoop-env.sh

[root@master hadoop]# pwd

/home/kunlun/hadoop/etc/hadoop

[root@master hadoop]# vim hadoop-env.sh

# The java implementation to use.

export JAVA_HOME=/usr/java/latest/   # set JAVA_HOME here
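For repeated test installs, the two XML files above can be rendered from a few variables instead of being edited by hand. This is only a sketch under stated assumptions: `write_single_node_conf` is a hypothetical helper, the property values mirror the ones shown in this section, and the demo writes into a scratch directory rather than `$HADOOP_CONF_DIR`.

```bash
#!/usr/bin/env bash
# Write minimal core-site.xml and hdfs-site.xml for a single-node test setup.
# $1: config directory, $2: NameNode host,
# $3: NameNode RPC port (default 9000), $4: Hadoop temp dir.
write_single_node_conf() {
    local conf_dir="$1" nn_host="$2"
    local nn_port="${3:-9000}" tmp_dir="${4:-/home/kunlun/hadoop/data/tmp}"
    mkdir -p "$conf_dir"

    cat > "$conf_dir/core-site.xml" <<EOF
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${nn_host}:${nn_port}</value>
  </property>
  <!-- Hadoop temporary directory -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>${tmp_dir}</value>
  </property>
</configuration>
EOF

    # A single DataNode can hold only one replica of each block.
    cat > "$conf_dir/hdfs-site.xml" <<EOF
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
}

# Demo: render into a scratch directory and show the result.
demo_conf="$(mktemp -d)"
write_single_node_conf "$demo_conf" 192.168.0.135
cat "$demo_conf/core-site.xml"
```

On a machine where Hadoop is installed, `hdfs getconf -confKey fs.defaultFS` prints the value that Hadoop actually resolves, which is a quick way to confirm that the intended file is being read.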

05 Format the NameNode

[root@kunlun hadoop]# hadoop namenode -format

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

21/03/04 11:18:08 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = localhost/127.0.0.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.3

STARTUP_MSG: classpath = /home/kunlun/hadoop/etc/hadoop:/home/kunlun/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/home/kunlun/hadoop/sh...

...

...

21/03/04 11:18:09 INFO common.Storage: Storage directory /home/kunlun/hadoop/data/tmp/dfs/name has been successfully formatted.

21/03/04 11:18:09 INFO namenode.FSImageFormatProtobuf: Saving image file /home/kunlun/hadoop/data/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

21/03/04 11:18:09 INFO namenode.FSImageFormatProtobuf: Image file /home/kunlun/hadoop/data/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 351 bytes saved in 0 seconds.

21/03/04 11:18:09 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

21/03/04 11:18:09 INFO util.ExitUtil: Exiting with status 0

21/03/04 11:18:09 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost/127.0.0.1

************************************************************/
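Formatting an already formatted NameNode discards its existing metadata, so a scripted install may want a guard around this step. The sketch below assumes the name directory reported in the log above (`/home/kunlun/hadoop/data/tmp/dfs/name`) and uses `hdfs namenode -format`, the non-deprecated form that the warning recommends. The function names are hypothetical, and nothing is formatted until `format_if_needed` is called.

```bash
#!/usr/bin/env bash
# Return 0 if the NameNode storage directory already looks formatted.
# A formatted name directory contains a current/VERSION file.
namenode_is_formatted() {
    local name_dir="$1"
    [ -f "$name_dir/current/VERSION" ]
}

# Format only when no existing metadata is found.
# $1: NameNode storage directory (defaults to the path used in this guide).
format_if_needed() {
    local name_dir="${1:-/home/kunlun/hadoop/data/tmp/dfs/name}"
    if namenode_is_formatted "$name_dir"; then
        echo "NameNode already formatted at $name_dir; skipping"
        return 0
    fi
    hdfs namenode -format
}
```

Calling `format_if_needed` with no argument checks the default path and formats only on a fresh install.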

06 Start HDFS with the startup script

[root@kunlun sbin]# ./start-dfs.sh

Starting namenodes on [192.168.0.135]

192.168.0.135: starting namenode, logging to /home/kunlun/hadoop/logs/hadoop-root-namenode-kunlun.out

localhost: starting datanode, logging to /home/kunlun/hadoop/logs/hadoop-root-datanode-kunlun.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /home/kunlun/hadoop/logs/hadoop-root-secondarynamenode-kunlun.out

[root@kunlun sbin]# jps

3409 NameNode

3973 DataNode

4725 SecondaryNameNode

9150 Jps

[root@kunlun hadoop]# netstat -antlp | grep 50070

tcp 0 0 0.0.0.0:50070 0.0.0.0:* LISTEN 3409/java
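The `jps` check can be automated by scanning its output for the three daemons started above. This is a minimal sketch; `missing_hdfs_daemons` is a hypothetical helper that reads `jps` output on standard input.

```bash
#!/usr/bin/env bash
# Read `jps` output on stdin and report which expected HDFS daemons are missing.
# Prints one missing daemon name per line; returns 0 only if none are missing.
missing_hdfs_daemons() {
    local listing daemon status=0
    listing="$(cat)"
    for daemon in NameNode DataNode SecondaryNameNode; do
        # Compare against the whole second field so that "NameNode"
        # is not satisfied by a "SecondaryNameNode" line.
        if ! printf '%s\n' "$listing" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$daemon"
            status=1
        fi
    done
    return "$status"
}
```

A typical call is `jps | missing_hdfs_daemons && echo "all HDFS daemons are running"`.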

[root@kunlun bin]# ./hdfs dfs -mkdir /user

[root@kunlun bin]# ./hdfs dfs -mkdir /user/dream

[root@kunlun bin]# hadoop fs -ls /

Found 2 items

drwxr-xr-x - summerxwu supergroup 0 2022-04-15 14:42 /kunlun

-rw-r--r-- 1 summerxwu supergroup 1367639799 2022-07-10 11:20 /xx.tgz
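Beyond listing directories, a write and read round trip gives more confidence that the store can hold backup files. The following is a sketch only: `hdfs_round_trip` is a hypothetical helper, `/user/dream` is the directory created above, and the `HDFS_BIN` variable is an assumed override hook that lets the function be exercised without a running cluster.

```bash
#!/usr/bin/env bash
# Write a small file to HDFS, read it back, and compare the contents.
# $1: remote directory (defaults to the directory created in this guide).
# HDFS_BIN: command to invoke instead of `hdfs` (hypothetical test hook).
hdfs_round_trip() {
    local hdfs_bin="${HDFS_BIN:-hdfs}" remote_dir="${1:-/user/dream}"
    local local_file remote_file rc=0
    local_file="$(mktemp)"
    remote_file="$remote_dir/smoke-test-$$.txt"
    echo "hdfs smoke test $$" > "$local_file"

    {
        "$hdfs_bin" dfs -mkdir -p "$remote_dir" &&
        "$hdfs_bin" dfs -put -f "$local_file" "$remote_file" &&
        [ "$("$hdfs_bin" dfs -cat "$remote_file")" = "$(cat "$local_file")" ]
    } || rc=1

    # Clean up both copies regardless of the outcome.
    "$hdfs_bin" dfs -rm -f "$remote_file" >/dev/null 2>&1 || true
    rm -f "$local_file"
    return "$rc"
}
```

On the test node, `hdfs_round_trip && echo "HDFS read/write OK"` runs the check against the live cluster.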

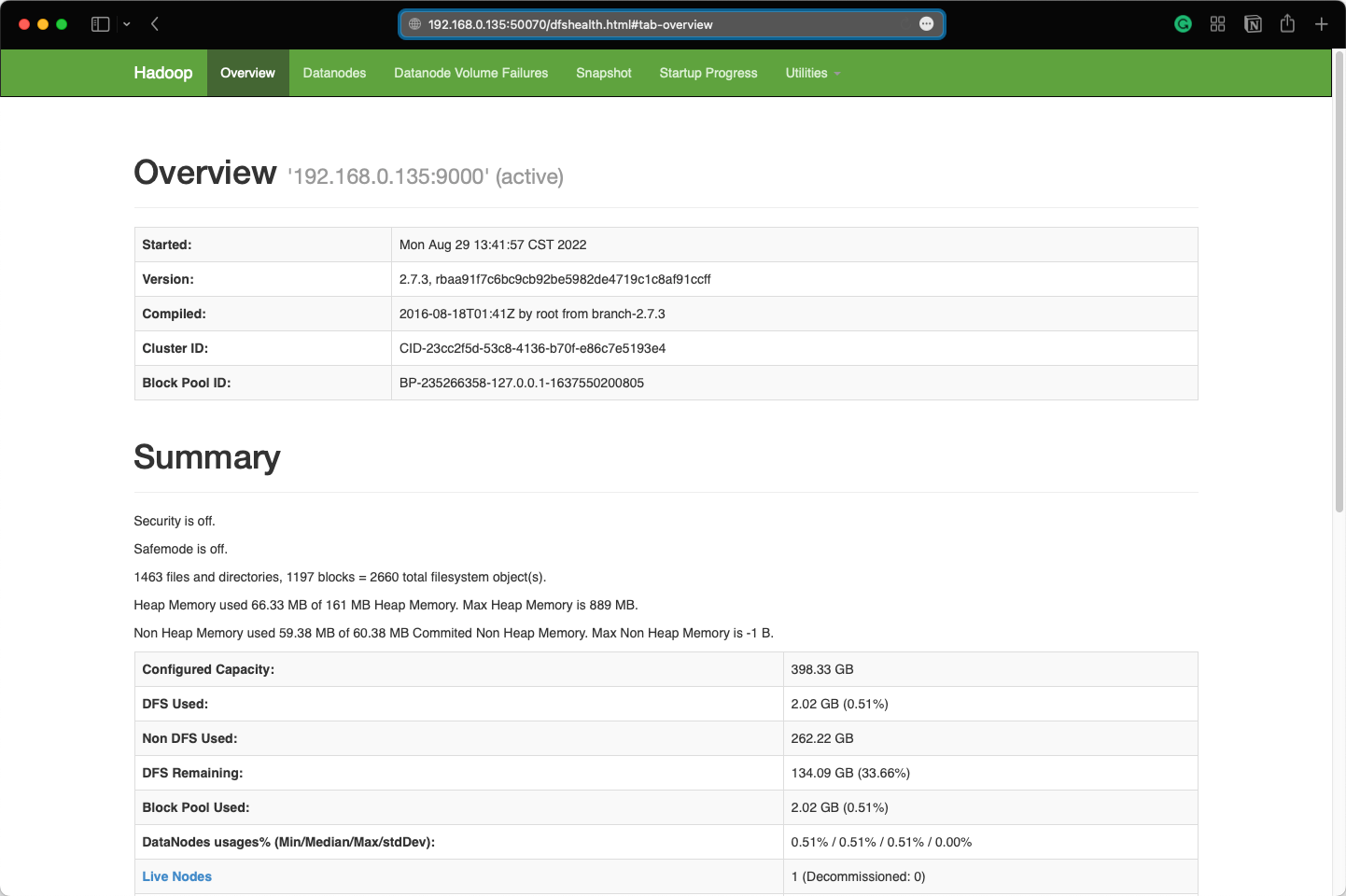

07 Verify in a browser